Automated Fruit Spoilage Analysis via Reflectance Imaging and Machine Learning

Grade 12

Problem

Background:

Global food insecurity is a prevalent issue in today’s society, affecting 1 in 11 people worldwide. To combat it, organizations such as UNICEF have pursued public-private partnerships and food upcycling; however, these require significant funding and ample time to implement. A problem these initiatives often encounter is global food waste. A report covering 2022 estimated that around 1.05 billion tonnes of food across the retail, food service, and household sectors combined were wasted. This is concerning given that regions across the globe struggle with food insecurity.

Food is often discarded prematurely, or mislabelled as edible despite being past due. These issues stem from reliance on traditional detection methods, which use static expiration dates rather than actual freshness, cannot account for undetected bacterial growth, and depend on subjective visual inspection. Such methods are inefficient, slow, and hard to scale, which hinders progress in modern spoilage classification. Existing automated detection methods tend to be expensive and ill-suited to large-scale analysis.

These shortcomings pose economic, environmental, humanitarian, and health-related risks to everyday consumers. The lack of accurate, automated methods for detecting spoiled produce directly contributes to food waste and economic losses. Food wastage makes production inefficient and ties up funds that could have addressed other global issues or supported changes in food distribution that prioritize countries facing food insecurity. Additionally, up to 8–10% of global greenhouse gas emissions are linked to food loss and waste, exacerbating the overall environmental impact.

Ultimately, the primary issue is the absence of an accurate and inexpensive way to determine the spoilage level of foods in real time and classify them as fresh or spoiled.

Research Question:

How can a real-time multi-wavelength imaging system combined with AI detection effectively classify spoilage in pre-packaged fruits?

Hypothesis:

A real-time multi-wavelength reflectance imaging system combined with AI will be able to detect and classify spoilage in pre-packaged fruits with higher accuracy and efficiency than traditional spoilage detection methods, such as manual inspection and reliance on expiration dates. Such a device would offer a cost-effective and reliable alternative.

Method

To address this issue, our project develops a device that combines reflectance-based imaging and machine learning to detect spoilage in real time. The design has three main components: a reflectance-based imaging system built from three types of LEDs, a spinning disc platform with auto-capture camera software for automation, and a convolutional neural network for spoilage classification.

1. LED Reflectance Imaging System

Reflectance-based imaging is a technique in which an object is illuminated and the back-scattered light is captured by a camera in order to gain insight into the qualities of food. Light can interact with the object in several ways: it can be absorbed, scattered, or reflected. The reflectance data is then collected, and patterns can be observed that depend on the level of spoilage. For this prototype, the dataset is limited to fruits widely eaten in Canada, and the LED rotation is limited to three colors: near-infrared, orange, and blue. These were chosen based on how far apart their wavelengths are, the information each relays about a fruit’s spoilage level, and the materials we had access to.

Near-infrared radiation (NIR) was chosen primarily for its penetration ability, which lets it reach beyond surface-level contamination. Because it passes through the skin of the fruit, it allows internal spoilage to be detected non-invasively. Moisture content, microbial growth, and structural degradation in specific areas of the sample show up as darker patches.

Orange, on the other hand, was chosen to complement NIR by detecting surface-level spoilage. It interacts well with fruit skin, enhancing the contrast of spoiled areas, specifically dark patches. Mold and oxidation alter the pigmentation of the skin, which provides surface-level insight.

Lastly, blue was chosen for its interaction with microbial activity, which produces natural fluorescence where microbes are present. Excitation by blue light therefore allows bacterial growth to be detected. It also reveals moisture changes, giving higher contrast between spoiled and unspoiled regions.

The lighting was sequenced one light at a time for the best results, meaning that pictures were taken under a single light rather than with all bands illuminating the fruit simultaneously. Each LED was set to maximum brightness on its dial so that brightness stayed constant. Because only one light source was available, each LED’s cord had to be swapped at the source manually. To keep the lighting consistent, stands were built and placed at fixed positions above the fruit. Each fruit was placed in a dark, box-like apparatus with a black curtain for lighting control. Draping the curtain was vital to ensure that no outside light reached the specimen’s surface and altered the images captured by the camera. Shells made of white cardboard were also added, since white surfaces reflect all wavelengths of visible light. The shells spread the light evenly over the fruit so that every crevice was uniformly lit.

To model real-world scenarios, packaging was included. Fruits such as apples, lemons, and oranges tend to come wrapped when bought in bulk, whereas bananas typically do not. The fruits were therefore imaged both with and without wrapping. For reflectance-based imaging to work, the packaging had to be transparent so that the fruit’s surface remained visible. However, shiny surfaces such as the plastic reflected excessive light into the camera, interfering with the light reflected from the fruit itself. To reduce this, the light source was positioned to minimize reflection.

2. Automated Integration

Spinning Disc Platform:

To improve the accuracy of the prototype, a spinning disc was added so that a single fruit could be imaged from several sides. This allowed the fruit to be examined as a whole, rather than only one sector of its surface.

The disc was built from the same cardboard-like material as the shells. It was cut into a bowl-like shape to prevent the fruit from rolling off during rotation. A servo motor was mounted under the bowl to spin it. Due to a lack of materials, we had to use a servo motor that could only rotate to the following angles: 0°, 90°, and 180°. The motor was attached to an Arduino Uno and programmed in C++ to spin in fixed increments. In detail, upon pressing run, the motor rotates 90°, waits 3 seconds, rotates 180°, waits 3 seconds, and then rotates once more to 90° on the other side before coming to a complete stop. At each pause, an image is captured by the chosen camera software: Thorlabs and CamoCamera.
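The rotate, pause, and capture loop described above can be sketched as follows. This is only a Python model of the timing logic, not the project's Arduino C++ code. The names `run_capture_cycle`, `set_angle`, and `capture` are hypothetical, and the sketch assumes one image per paused position (90° and 180°), leaving out the final move, which the description above does not specify precisely.

```python
import time
from typing import Callable, List, Sequence, Tuple


def run_capture_cycle(
    set_angle: Callable[[int], None],
    capture: Callable[[int], str],
    angles: Sequence[int] = (90, 180),   # assumed paused positions
    pause_s: float = 3.0,                # 3-second wait from the text
    sleep: Callable[[float], None] = time.sleep,
) -> List[Tuple[int, str]]:
    """Move to each angle, wait, then capture one image at that position.

    set_angle and capture are placeholders for the servo command and the
    camera trigger; both are injected so the logic can be tried without
    any hardware attached.
    """
    log = []
    for angle in angles:
        set_angle(angle)           # rotate the platform
        sleep(pause_s)             # let the fruit settle before imaging
        image_name = capture(angle)
        log.append((angle, image_name))
    return log


if __name__ == "__main__":
    # Dry run with stand-ins for the hardware and no real waiting.
    result = run_capture_cycle(
        set_angle=lambda a: print(f"servo -> {a} degrees"),
        capture=lambda a: f"fruit_{a:03d}.png",
        sleep=lambda s: None,
    )
    print(result)
```

Passing the servo and camera actions in as functions keeps the schedule itself easy to change, for example if a continuous-rotation servo later allows more stops.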

Camera Setup:

- Two cameras with independent software were used to capture the initial images for training the model. One captured achromatic (grayscale) images at higher resolution, whereas the other captured color with less fine detail. During real-time analysis, these cameras and their software took the sample images. The software packages, Thorlabs and CamoCamera, were chosen specifically because they offer integrated timed capture, which eliminates the need to take photos manually, and an auto-save feature, which transfers images of the sample into a folder linked to the convolutional neural network for real-time analysis.
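One simple way to link an auto-save folder to a classifier is to poll the folder for files that have not been seen yet. The sketch below illustrates that idea only; it is not the project's actual code. The function names, the list of file extensions, and the `classify` callable (standing in for the trained CNN) are all assumptions.

```python
from pathlib import Path
from typing import Callable, Dict, List, Set

# Assumed set of image extensions the camera software might save.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"}


def find_new_images(folder: str, seen: Set[str]) -> List[Path]:
    """Return image files in `folder` not seen before, and mark them as seen."""
    new_files = sorted(
        p for p in Path(folder).iterdir()
        if p.is_file()
        and p.suffix.lower() in IMAGE_SUFFIXES
        and p.name not in seen
    )
    seen.update(p.name for p in new_files)
    return new_files


def classify_new_images(
    folder: str,
    seen: Set[str],
    classify: Callable[[Path], str],
) -> Dict[str, str]:
    """Run the classifier on each newly saved image and collect the labels."""
    return {p.name: classify(p) for p in find_new_images(folder, seen)}
```

Calling `classify_new_images` on a timer (for instance, once per second) would give near-real-time results: each call handles only the images saved since the previous call.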

3. AI Model

There are various types of machine learning algorithms, each suited to a specific task. Since our project involves recognizing patterns in images to detect food spoilage, we opted for a Convolutional Neural Network (CNN), a type of neural network optimized for image classification. CNNs are inspired by the way the human brain processes visual information. They consist of layers of connected nodes, which mirror the neurons in our brain. These fundamental processing units are organized into input, hidden, and output layers. The input layer receives image data, specifically the multi-wavelength reflectance images captured under blue, orange, and NIR lighting. The hidden layers extract and process important features from these images, such as texture changes, discoloration, or surface patterns that indicate spoilage. The output layer then predicts the spoilage classification, such as “fresh,” “early spoilage,” or “fully spoiled.”

CNNs are especially effective because they automatically learn which features are important for distinguishing between spoilage levels. The image is first reduced in pixel size and then divided into smaller, more digestible portions for the network to process. The CNN then looks for key aspects of the image that separate one classification from another, detecting patterns such as color shifts or texture irregularities.

At the beginning of training, the CNN starts with randomized weights. Weights represent the connections between two nodes and indicate the influence one node’s output has on the next node’s input. As the model is trained on labeled food images, it gradually adjusts these weights to improve accuracy and correctly classify spoilage levels. Early in training, the weights change by large amounts. As they approach the correct values, they change by smaller increments, which fine-tunes them.

This ability to learn visual patterns without manual feature extraction makes CNNs ideal for real-time food spoilage detection in our project. The network is integrated into our real-time imaging system, allowing it to analyze reflectance images and classify the food's spoilage status as soon as they are captured.
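The weight-adjustment behaviour described above (large changes early, smaller ones near the correct values) can be illustrated with a model that has a single weight. This toy example is not a CNN and is not taken from the project code. It assumes plain gradient descent on a mean squared error, and the function name and data are made up for illustration.

```python
import random
from typing import List, Sequence, Tuple


def train_single_weight(
    xs: Sequence[float],
    ys: Sequence[float],
    lr: float = 0.1,
    epochs: int = 10,
    seed: int = 0,
) -> Tuple[float, List[float]]:
    """Fit y = w * x by gradient descent, starting from a random weight.

    Returns the final weight and the size of each update step.
    """
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)          # randomized starting weight
    step_sizes = []
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        step = -lr * grad               # move against the gradient
        w += step
        step_sizes.append(abs(step))
    return w, step_sizes


if __name__ == "__main__":
    # Toy data generated by the rule y = 2x, so the correct weight is 2.
    weight, steps = train_single_weight([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
    print(f"final weight: {weight:.6f}")
    print("first step:", steps[0], "last step:", steps[-1])
```

Because the gradient shrinks as the weight approaches the value that fits the data, each update is smaller than the one before, which is the fine-tuning effect described in the text. A real CNN applies the same idea to many weights at once.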

Analysis

Link to Project Code:

https://github.com/KEVK-05/CYSF-Project.git

For each fruit, we had a combination of gray and colored images, totalling around 650 pictures. To make the system more practical for in-store use, we imaged fruits both with and without packaging (except bananas, which typically come unpackaged in bundles). Notable characteristics were observed for each fruit, as shown in the results below:

Orange Light:

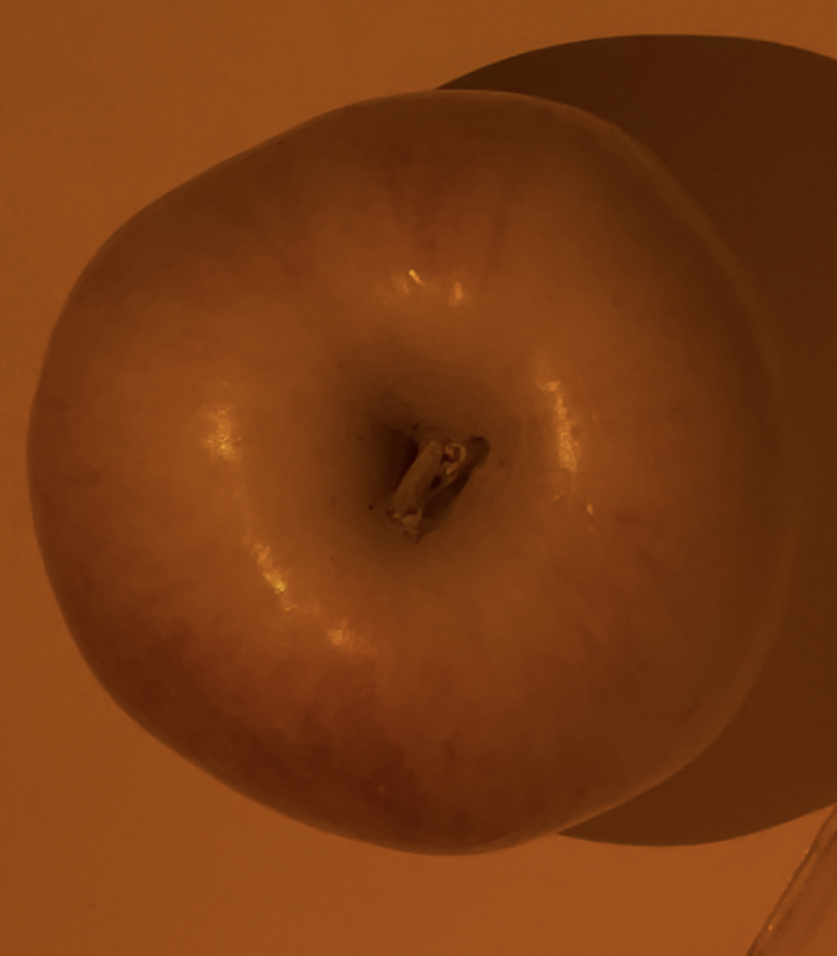

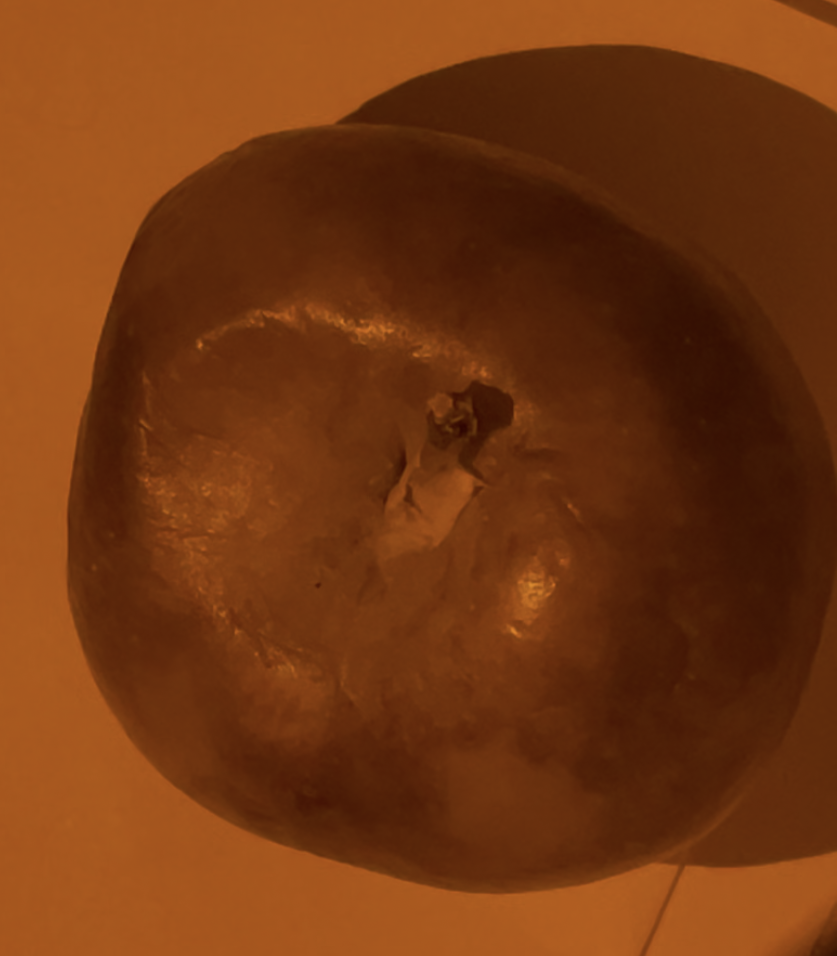

As predicted, the orange LED outlined surface spoilage. The fresh apple on the left appears lighter in color, whereas the spoiled apple on the right appears darker. Browning and wrinkles are highlighted under orange light.

Near-Infrared Light:

For fruits imaged under NIR, internal spoilage shows up as differences in shade. A comparison can be made between a banana under NIR and the same banana under blue light. The first image shows a more even shade, whereas the second shows a range of shades across the skin, with some patches lighter gray and others darker.

Blue Light:

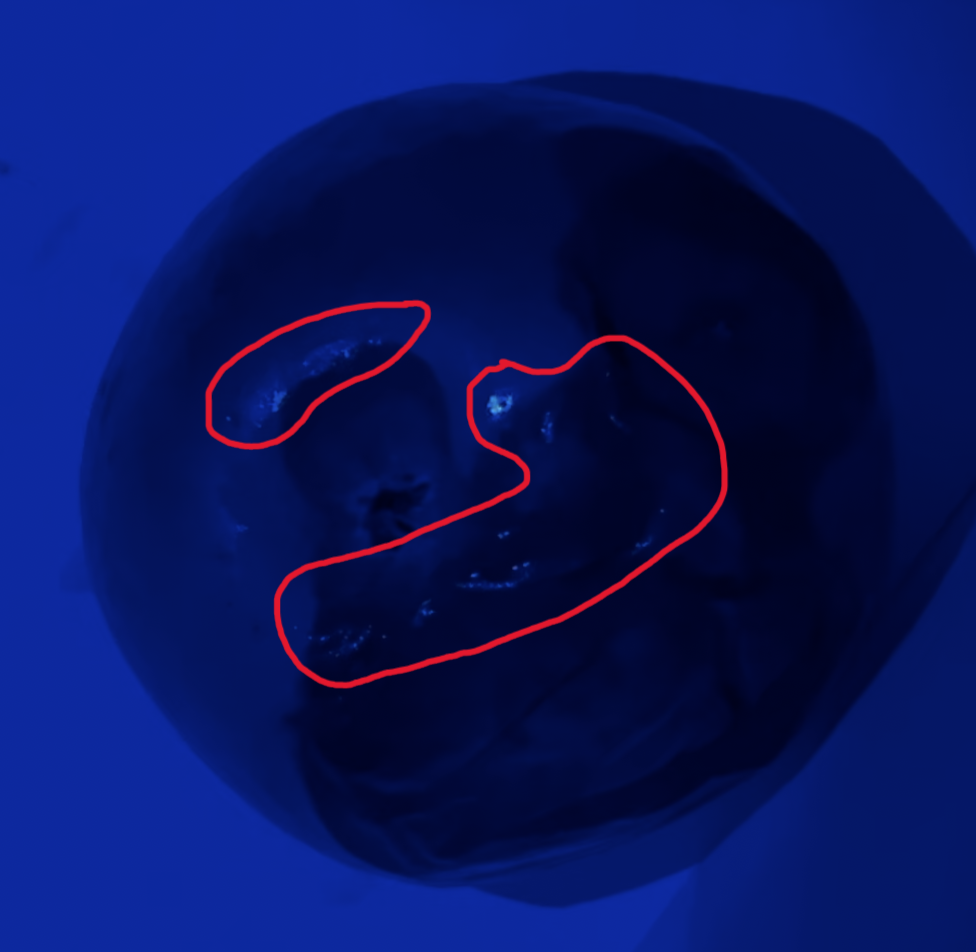

Under the blue light, evidence of fluorescence was detected, specifically in the spoiled apple. The highlighted areas in the image represent microbial activity, which fluoresces under blue light.

Model Analysis:

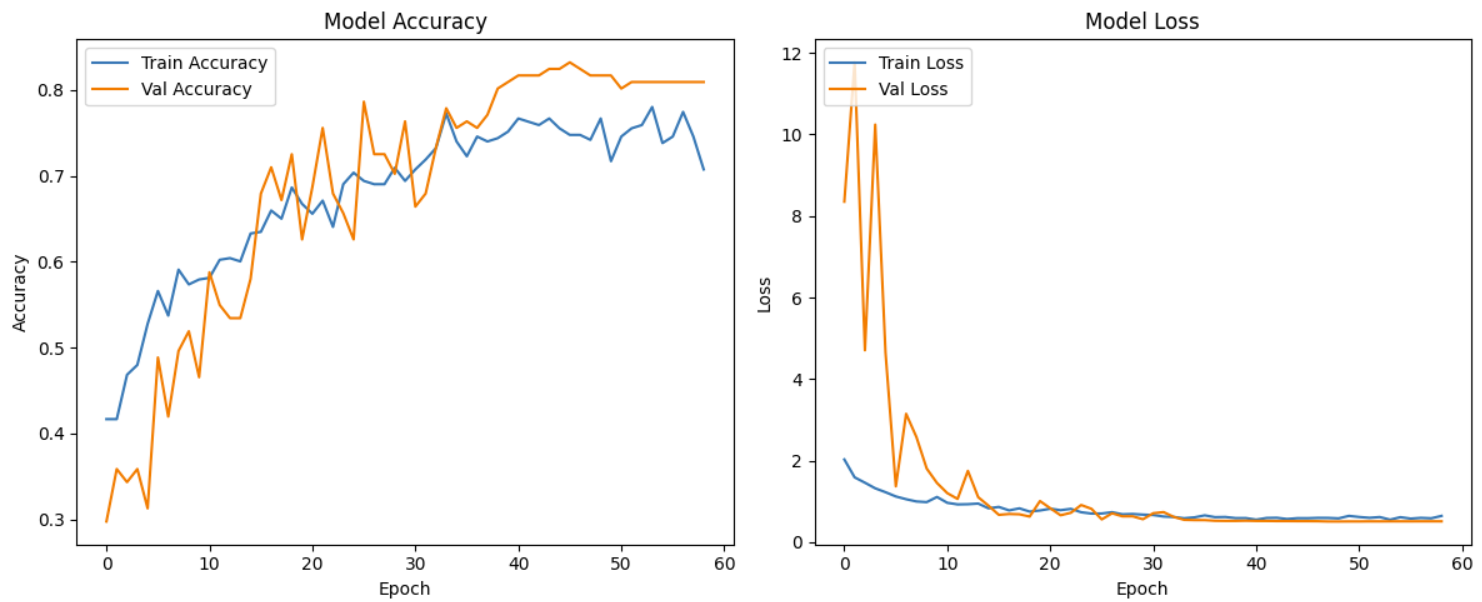

The CNN performed well, reaching an accuracy of about 80%. Initial runs, however, were inconsistent, with results as low as 8% and as high as 75%. This gap came down to one major factor: the weights. The CNN starts from randomly selected weights, and in the early version these could not be adjusted to fit the data accurately, so each run was either a hit or a miss, and most were misses. After we changed how the weights are updated during training, the CNN could actually detect spoilage. The spoilage tests now work much better than the original ones, and the CNN identifies whether a fruit is spoiled with a high degree of confidence.

The graphs depict the model's accuracy and loss. The accuracy graph is the one used to interpret the results. The training accuracy increases progressively but eventually falls; however, the best-performing model can be kept. The validation accuracy (val accuracy), on the other hand, is the accuracy of the model on images it has never seen before. In early epochs it is much lower, reflecting that the model is still learning from unfamiliar information. Eventually it rises above the training accuracy, showing that the model handles images it has not previously encountered. The curves fluctuate in the middle of training but become relatively stable towards the end. Finally, the accuracy at around 60 epochs is not as high as at around 40 epochs. We attribute this to the model getting some images right by guessing.
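Keeping "the best of the models" amounts to finding the epoch with the highest validation accuracy. A minimal sketch of that selection follows. The function name is hypothetical and the accuracy values are made-up placeholders, not the project's measurements.

```python
from typing import Sequence, Tuple


def best_epoch(val_accuracy: Sequence[float]) -> Tuple[int, float]:
    """Return the 1-based epoch number with the highest validation accuracy.

    If several epochs tie, the earliest one is returned.
    """
    best_index = max(range(len(val_accuracy)), key=lambda i: val_accuracy[i])
    return best_index + 1, val_accuracy[best_index]


if __name__ == "__main__":
    # Illustrative values only: accuracy rises, peaks, then dips slightly.
    history = [0.10, 0.35, 0.62, 0.80, 0.76, 0.78]
    epoch, accuracy = best_epoch(history)
    print(f"best epoch: {epoch}, val accuracy: {accuracy:.2f}")
```

In Keras, the `ModelCheckpoint` callback with `save_best_only=True` serves a similar purpose by saving the model only when the monitored metric improves. Whether the project uses it is not stated here.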

Conclusion

From the experiment, we believe we succeeded in creating an AI capable of recognizing fruit spoilage. The algorithm trained quickly, generally in under 50 epochs, and reached an accuracy of around 80% in both training and testing.

However, there were quite a few challenges involved when constructing the code.

The first major problem was that the program was failing the tests completely. The CNN was originally tested on a different, simpler dataset, where it reached an accuracy of around 80–90% in training and scored similarly on the test. On our dataset, however, the CNN scored around 80% in training but only 8% on the test. This meant that the CNN was overfitting and could not interpret new images. While accuracy is a good indicator of how well the CNN does on its training data, validation accuracy (val_accuracy in the code) measures how well it does on data it has not seen. To increase accuracy, we added a monitor on val_loss that reduces the learning rate, which controls how much the weights change at each update. This changed the CNN's behaviour significantly and reversed the earlier result: data recognition became much more refined, and the model was able to score 80% on the test.
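The idea of monitoring val_loss and lowering the learning rate can be sketched in plain Python. This is an illustration of the general reduce-on-plateau technique, not the project's code. The class name, the `factor`, `patience`, and `min_lr` settings, and the loss values are all assumptions. Keras provides a `ReduceLROnPlateau` callback that works along these lines, but the settings the project used are not given here.

```python
from typing import List


class ReduceOnPlateau:
    """Lower the learning rate when the validation loss stops improving."""

    def __init__(self, lr: float, factor: float = 0.5,
                 patience: int = 2, min_lr: float = 1e-6) -> None:
        self.lr = lr
        self.factor = factor        # multiply lr by this on a plateau
        self.patience = patience    # epochs without improvement to tolerate
        self.min_lr = min_lr        # never go below this learning rate
        self.best = float("inf")
        self.wait = 0

    def step(self, val_loss: float) -> float:
        """Record one epoch's validation loss and return the lr to use next."""
        if val_loss < self.best:
            self.best = val_loss    # improvement: reset the counter
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


def schedule(losses: List[float], lr: float = 0.001) -> List[float]:
    """Return the learning rate after each epoch for a list of val losses."""
    monitor = ReduceOnPlateau(lr)
    return [monitor.step(loss) for loss in losses]


if __name__ == "__main__":
    # Made-up validation losses with two plateaus.
    print(schedule([1.0, 0.8, 0.81, 0.82, 0.7, 0.71, 0.72, 0.73]))
```

A smaller learning rate means each weight update is smaller, so once progress stalls the model makes finer adjustments instead of overshooting.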

Another issue was our project budget. Although a professor granted us permission to use his lab, we still lacked certain parts needed to build the real-time analysis properly. We only had access to one light source, which is why, rather than automating the LEDs, we had to switch them out manually after imaging under each light. Additionally, we were lent only an Arduino Uno and a standard servo motor. The standard servo only permitted rotations of 90 to 180 degrees, rather than the full 360 degrees of a continuous-rotation servo. As a result, for larger fruits we could only run images of about half of the surface through the model.

One possible improvement would be to collect more data in order to increase the overall accuracy of the CNN. Our CNN has an accuracy of around 80%; while that is adequate for a prototype such as ours, an accuracy near 100% would be ideal. This could be pursued by altering existing features to suit the CNN better or by adding new features that the CNN would benefit from. Another improvement would be to acquire more materials so that the machine can be built to run better in real time.

Our project lays a foundation for what more powerful CNNs can do. We built only a basic CNN, alongside basic lab work to collect data, with limited budget and time. With greater financing and access to a wider variety of resources, the project could be enhanced further.

Citations

Agriculture and Agri-Food Canada. (2024, July 22). Statistical overview of the Canadian fruit industry, 2023. https://agriculture.canada.ca/en/sector/horticulture/reports/statistical-overview-canadian-fruit-industry-2023

Cheng, J.-H., & Sun, D.-W. (2015). Rapid and non-invasive detection of fish microbial spoilage by visible and near infrared hyperspectral imaging and multivariate analysis. LWT - Food Science and Technology, 62(2), 1060–1068. https://doi.org/10.1016/j.lwt.2015.01.021

El Najjar, N., van Teeseling, M. C., Mayer, B., Hermann, S., Thanbichler, M., & Graumann, P. L. (2020). Bacterial cell growth is arrested by violet and blue, but not yellow light excitation during fluorescence microscopy. BMC Molecular and Cell Biology, 21(1). https://doi.org/10.1186/s12860-020-00277-y

Elmasry, G., Kamruzzaman, M., Sun, D.-W., & Allen, P. (2012). Principles and applications of hyperspectral imaging in quality evaluation of Agro-Food Products: A Review. Critical Reviews in Food Science and Nutrition, 52(11), 999–1023. https://doi.org/10.1080/10408398.2010.543495

Fodor, M., Matkovits, A., Benes, E. L., & Jókai, Z. (2024). The Role of Near-Infrared Spectroscopy in Food Quality Assurance: A Review of the Past Two Decades. Foods, 13(21), 3501. https://doi.org/10.3390/foods13213501

Gitelson, A. A., Gritz, Y., & Merzlyak, M. N. (2003). Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. Journal of Plant Physiology, 160(3), 271–282. https://doi.org/10.1078/0176-1617-00887

Guillermin, P., Camps, C., & Bertrand, D. (2005). Detection of bruises on apples by near infrared reflectance spectroscopy. Acta Horticulturae, (682), 1355–1362. https://doi.org/10.17660/actahortic.2005.682.182

Huang, H., Liu, L., & Ngadi, M. (2014). Recent developments in hyperspectral imaging for assessment of Food Quality and safety. Sensors, 14(4), 7248–7276. https://doi.org/10.3390/s140407248

Nikolava, K., Zlatanov, M., Eftimov, T., Brabant, D., Yosifova, S., Halil, E., Antova, G., & Angelova, M. (n.d.). Fluorescence spectra of mixture of sunflower and olive oils with... Fluorescence Spectra From Vegetable Oils Using Violet And Blue Ld/Led Excitation And An Optical Fiber Spectrometer. https://www.researchgate.net/figure/Fluorescence-spectra-of-mixture-of-sunflower-and-olive-oils-with-excitation-at-l-425-nm_fig5_263368577

Qu, J.-H., Liu, D., Cheng, J.-H., Sun, D.-W., Ma, J., Pu, H., & Zeng, X.-A. (2015). Applications of near-infrared spectroscopy in Food Safety Evaluation and control: A review of recent research advances. Critical Reviews in Food Science and Nutrition, 55(13), 1939–1954. https://doi.org/10.1080/10408398.2013.871693

United Nations Environment Programme. (2024). Food Waste Index Report 2024. Think Eat Save: Tracking Progress to Halve Global Food Waste. Retrieved 2025, from https://wedocs.unep.org/20.500.11822/45230

WHO. (2024). Hunger numbers stubbornly high for three consecutive years as global crises deepen: UN report. WHO. Retrieved 2025, from https://www.who.int/news/item/24-07-2024-hunger-numbers-stubbornly-high-for-three-consecutive-years-as-global-crises-deepen--un-report

Wu, D., & Sun, D.-W. (2013). Potential of time series-hyperspectral imaging (TS-hsi) for non-invasive determination of microbial spoilage of Salmon Flesh. Talanta, 111, 39–46. https://doi.org/10.1016/j.talanta.2013.03.041

Acknowledgement

We would like to acknowledge Dr. Grant for lending us his lab to collect data and supplying us with the necessary materials to carry out the experiment. We would also like to thank Mrs. Fanying for coordinating her schedule with ours and giving us lab access during work hours. Lastly, we would like to thank Mrs. Pollard for helping us with registration and supporting us throughout the whole process.