Using AI to Screen and Detect Skin Cancer

Grade 9

Presentation

Problem

Introduction

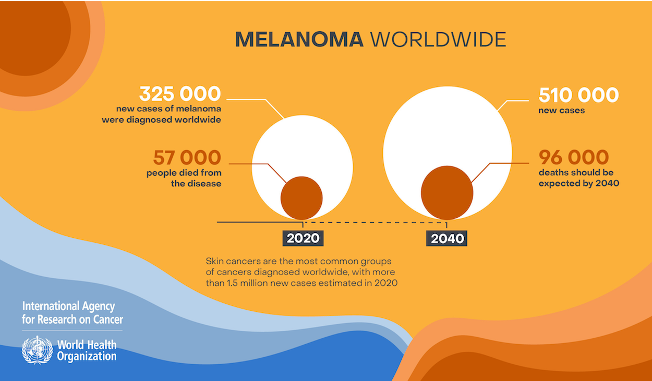

Skin cancer is one of the most common types of cancer. About one-third of all new cancer cases in Canada are skin cancers, and the rate continues to rise (Canada.ca, 2023). Advanced melanoma has historically had poor survival rates. There are two main types of skin cancer: melanoma and non-melanoma. Non-melanoma skin cancers include basal cell carcinoma and squamous cell carcinoma (Canadian Cancer Society, n.d.); these are less aggressive and less common in young people (WebMD, 2022). Melanoma skin cancers are more aggressive and more likely to metastasize (spread to other parts of the body). "Melanoma is the most serious form of skin cancer. In the United States, it is the fifth most common cancer in males and females; its incidence increases with age" (Swetter & Geller, 2023).

Melanoma statistics, taken from the World Health Organization's International Agency for Research on Cancer.

Problem

Advanced melanoma skin cancer is highly fatal, and unless it is treated early, its tumours can invade many tissues. The screening process to identify melanoma is time-consuming, and distinguishing benign from malignant tumours takes even longer because it requires a biopsy. Many people cannot afford to pay for a biopsy themselves, so they may have to wait months or even a year for an appointment covered by insurance. During that time, the lesion can grow and spread to other tissues. Because early detection can save many lives, my project aims to answer the question:

How can we make a real-time object detection program to screen and detect melanoma skin cancer tumours to save lives?

Solution

Leveraging the YOLOv5 (You Only Look Once) deep convolutional neural network (CNN), we aim to screen for and classify different tumour types using a skin cancer dataset collected from the internet. Numerous studies have shown that deep CNN models can distinguish melanoma from benign nevi (moles). For example, one study compared deep CNNs, trained on nearly 130,000 clinical and dermoscopic images of pigmented lesions, with 21 expert dermatologists. On average, the deep CNNs outperformed the dermatologists at classifying melanoma versus benign nevus (Swetter & Geller, 2023).

My project proposes a real-time AI object detection system for screening, built on a comprehensive dataset of malignant and benign skin lesions. It aims to speed up the process of identifying skin cancer tumours and classifying their type, enabling earlier detection and potentially saving lives.

Background Research

Skin Cancer:

Melanoma is an aggressive and potentially fatal type of skin cancer, known for its rapid invasion into surrounding tissues. The skin tumours this project screens fall into two types: malignant and benign. Malignant (cancerous) tumours not only invade tissues but also destroy the cells within them. Benign tumours, such as most moles, are non-cancerous, but some can develop into pre-malignant lesions and eventually transform into malignant tumours, which become a significant threat once they begin to invade and destroy cells.

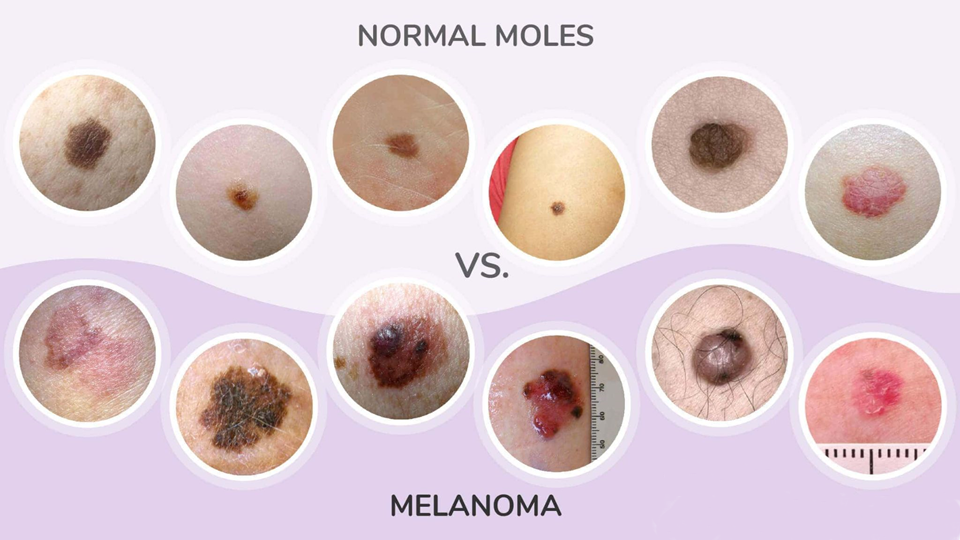

Melanoma skin cancer begins in the melanocytes, the cells responsible for producing melanin. Melanocytes can also cluster together to form moles on the skin. These moles, which are usually benign tumours, are non-cancerous, but they are crucial for training AI models, especially deep convolutional neural networks (CNNs): the visible differences between moles and malignant lesions give these models the data points they need to distinguish benign from malignant conditions.

An image showing the differences between normal moles and malignant melanoma tumours.

Deep Neural Network:

To create a deep CNN object detector, many tools and models are available, including the TensorFlow and TensorFlow Lite frameworks, the TensorRT inference engine, and the SSD-MobileNet detection network. All of these build on the basics of convolutional neural networks. YOLO (You Only Look Once) uses single-shot detection: it processes each image in a single pass through the neural network, which makes detection faster.

Traditional CNN detectors use separate stages for region proposal and classification, whereas YOLO uses a single, unified model, which greatly improves speed and efficiency. I will be using the Jetson Nano, a small single-board computer made by NVIDIA that is similar to the Raspberry Pi. Unlike the Raspberry Pi, however, the Jetson Nano is designed for AI tasks such as real-time object detection: its NVIDIA Tegra processor includes a CUDA-enabled GPU that can accelerate deep neural networks.

Method

Before I start my project, I need to gather the following materials:

| Material | Cost |
| --- | --- |
| Jetson Nano | $242.38 |
| Camera Module | $16.49 |
| Jumper Wires | $12.69 |
| Red LED light | $1.99 |

To create a real-time AI object detection model, there are three main steps:

1. Annotating the Dataset

What is a dataset?

For object detection, a dataset is a collection of images and matching text files. Each text file records the location and class of the objects in its image. This text-file format is specific to YOLO; other annotation formats, such as Pascal VOC, store the same information in XML (Extensible Markup Language) files.
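Concretely, each line of a YOLO label file describes one bounding box as five numbers: the class ID, then the box's centre x, centre y, width, and height, each divided by the image's width or height so that they fall between 0 and 1. Pascal VOC instead records the box's pixel corners (xmin, ymin, xmax, ymax). The short Python sketch below converts one format to the other; the function name `voc_to_yolo` and the class numbering (0 = benign, 1 = malignant) are assumptions for illustration, not taken from LabelImg or YOLOv5.

```python
def voc_to_yolo(box, img_w, img_h, class_id):
    """Convert a Pascal VOC box (xmin, ymin, xmax, ymax, in pixels)
    into one line of a YOLO label file."""
    xmin, ymin, xmax, ymax = box
    # YOLO stores the box centre and size as fractions of the image size
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    # Hypothetical example: a 200 x 100 pixel box in the middle of a 640 x 480 image,
    # labelled as class 1 (assumed here to mean "malignant")
    print(voc_to_yolo((220, 190, 420, 290), 640, 480, 1))
    # prints: 1 0.500000 0.500000 0.312500 0.208333
```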

First, I searched for a dataset that would be appropriate for screening melanoma. I chose a dataset from Kaggle with 10,000 images in two object detection classes: malignant and benign. With these classes defined, I could annotate the dataset.

I annotated the images using LabelImg, a program that labels images with bounding boxes. I labelled all 10,000 images (5,000 per class), drawing a bounding box on each one to show the program where the lesion is and whether it is malignant or benign. A dataset this large and evenly balanced between the two classes gives the model many examples of each class to learn from, which helps it classify accurately.

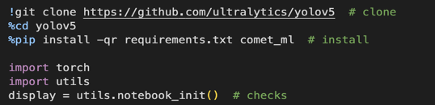
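Hand-labelling 10,000 images makes it easy to skip an image or pick the wrong class. A quick check like the Python sketch below can confirm the annotations before training: it counts the boxes in each class and lists images that have no label file. It assumes the images and YOLO label files sit in separate folders with matching file names; the folder and function names are hypothetical.

```python
from collections import Counter
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def count_boxes_per_class(label_dir):
    """Count bounding boxes per class ID across all YOLO .txt label files."""
    counts = Counter()
    for label_file in Path(label_dir).glob("*.txt"):
        if label_file.name == "classes.txt":
            continue  # LabelImg's YOLO mode writes the class names here, not boxes
        for line in label_file.read_text().splitlines():
            if line.strip():
                counts[int(line.split()[0])] += 1  # first field is the class ID
    return counts


def images_missing_labels(image_dir, label_dir):
    """List image files that have no label file with the same base name."""
    label_dir = Path(label_dir)
    return sorted(
        p.name
        for p in Path(image_dir).iterdir()
        if p.suffix.lower() in IMAGE_EXTS and not (label_dir / f"{p.stem}.txt").exists()
    )


if __name__ == "__main__":
    # Hypothetical folder names; adjust to wherever LabelImg saved the files
    print(count_boxes_per_class("dataset/labels"))
    print(images_missing_labels("dataset/images", "dataset/labels"))
```

For a balanced dataset like this one, the two counts should come out close to 5,000 each, and the list of images missing labels should be empty.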

2. Training the Model

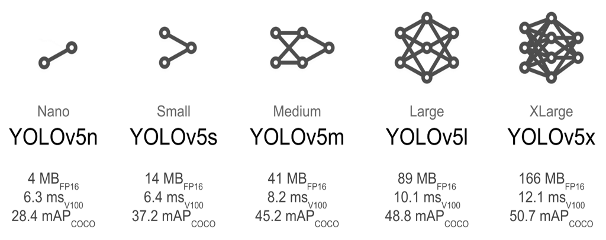

Next, I train my model using the YOLOv5 neural network. To train it locally on my Jetson Nano, I first select a pre-trained model and retrain it on my own dataset, which gives results faster than training from scratch. I chose YOLOv5s, the second-smallest and second-fastest model available. Before training, I install the prerequisites. First, I clone the YOLOv5 repository, which provides the training code and access to the pre-trained models. Next, I install comet_ml, a tool that logs and displays training results, through pip (preferred installer program). Finally, I import PyTorch, the deep learning framework that YOLOv5 is built on.
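The YOLOv5 repository lists its Python dependencies in `requirements.txt`, which is normally installed with `pip install -r requirements.txt` after cloning. Once those and comet_ml are installed, a short check like the sketch below can confirm that the packages are importable and that PyTorch can see the GPU. The function names are hypothetical; `importlib.util.find_spec` and `torch.cuda.is_available` are standard calls.

```python
import importlib.util


def check_packages(names=("torch", "cv2", "comet_ml")):
    """Report whether each package can be imported (True) or is missing (False)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def gpu_available():
    """True if PyTorch is installed and can use a CUDA GPU (such as the Jetson Nano's)."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch  # imported here so the check still runs when PyTorch is missing

    return torch.cuda.is_available()


if __name__ == "__main__":
    for name, ok in check_packages().items():
        print(f"{name}: {'installed' if ok else 'MISSING'}")
    print("CUDA GPU available:", gpu_available())
```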

YOLOv5 is the base of my project: it is the core of the program's AI object detection and runs at high speed. YOLO (You Only Look Once) is faster and more efficient than traditional CNN detectors because it uses single-shot detection, passing each image through the network only once.

I use the pre-trained YOLOv5s model as the "base" of my project and fine-tune it on my annotated dataset. This is called transfer learning: the model starts from weights that were already trained on a large general image dataset (COCO), and training adjusts those weights so the model recognizes malignant and benign lesions.

During training, YOLOv5 reads each image together with its label text file, which tells it where the lesion is and which class it belongs to. The model compares its predicted bounding boxes with these labels and adjusts its weights to reduce the difference.
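YOLOv5 finds each image's label file from the image's path: it swaps the last `images` folder for `labels` and the file extension for `.txt`. The plain-Python sketch below mirrors that convention (the function and file names are hypothetical; YOLOv5 does this internally) and converts a label line back into pixel corners, which is the box the model's predictions are compared against.

```python
import os


def image_to_label_path(image_path):
    """Map an image path to its label path in the style YOLOv5 uses:
    replace the last 'images' folder with 'labels' and the extension with '.txt'."""
    sa, sb = f"{os.sep}images{os.sep}", f"{os.sep}labels{os.sep}"
    return sb.join(image_path.rsplit(sa, 1)).rsplit(".", 1)[0] + ".txt"


def parse_label_line(line, img_w, img_h):
    """Turn one YOLO label line into (class_id, xmin, ymin, xmax, ymax) in pixels."""
    class_id, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


if __name__ == "__main__":
    img = os.path.join("datasets", "melanoma", "images", "train", "lesion_0001.jpg")
    print(image_to_label_path(img))  # datasets/melanoma/labels/train/lesion_0001.txt on Linux
    print(parse_label_line("1 0.5 0.5 0.25 0.5", 640, 480))  # (1, 240.0, 120.0, 400.0, 360.0)
```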

The YOLOv5s model has about 7.2 million parameters (learnable weights). The dataset had two directories: train and test. YOLOv5 trains the model on the train directory, which contains all 10,000 images I annotated. Training took about one day to complete on my home PC.
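To point YOLOv5 at the train and test directories, training needs a small dataset YAML file and a call to YOLOv5's `train.py`. The Python sketch below writes such a file and builds the command. The dataset paths, the file name `melanoma.yaml`, and the image size, batch size, and epoch values are assumptions, not the settings used in this project; `--img`, `--batch-size`, `--epochs`, `--data`, and `--weights` are options of YOLOv5's `train.py`. The `names` mapping follows recent YOLOv5 versions (older ones expect `nc:` plus a list of names).

```python
import subprocess
import sys

# Hypothetical layout: <path>/train/{images,labels} and <path>/test/{images,labels}
DATA_YAML = """\
path: ../datasets/melanoma  # dataset root, relative to the yolov5 folder (assumed)
train: train/images         # images the model learns from
val: test/images            # held-out images scored after every epoch
names:
  0: benign
  1: malignant
"""


def build_train_command(data_yaml="melanoma.yaml", epochs=100, img_size=640, batch_size=16):
    """Build the command that fine-tunes the pre-trained YOLOv5s weights."""
    return [
        sys.executable, "train.py",
        "--img", str(img_size),
        "--batch-size", str(batch_size),
        "--epochs", str(epochs),
        "--data", data_yaml,
        "--weights", "yolov5s.pt",  # start from the pre-trained YOLOv5s checkpoint
    ]


if __name__ == "__main__":
    # Run from inside the cloned yolov5 folder, where train.py lives
    with open("melanoma.yaml", "w") as f:
        f.write(DATA_YAML)
    subprocess.run(build_train_command(), check=True)
```

By default, YOLOv5 saves each run under `runs/train/exp` (numbered `exp2`, `exp3`, and so on for later runs), including the trained weights in its `weights/` folder.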

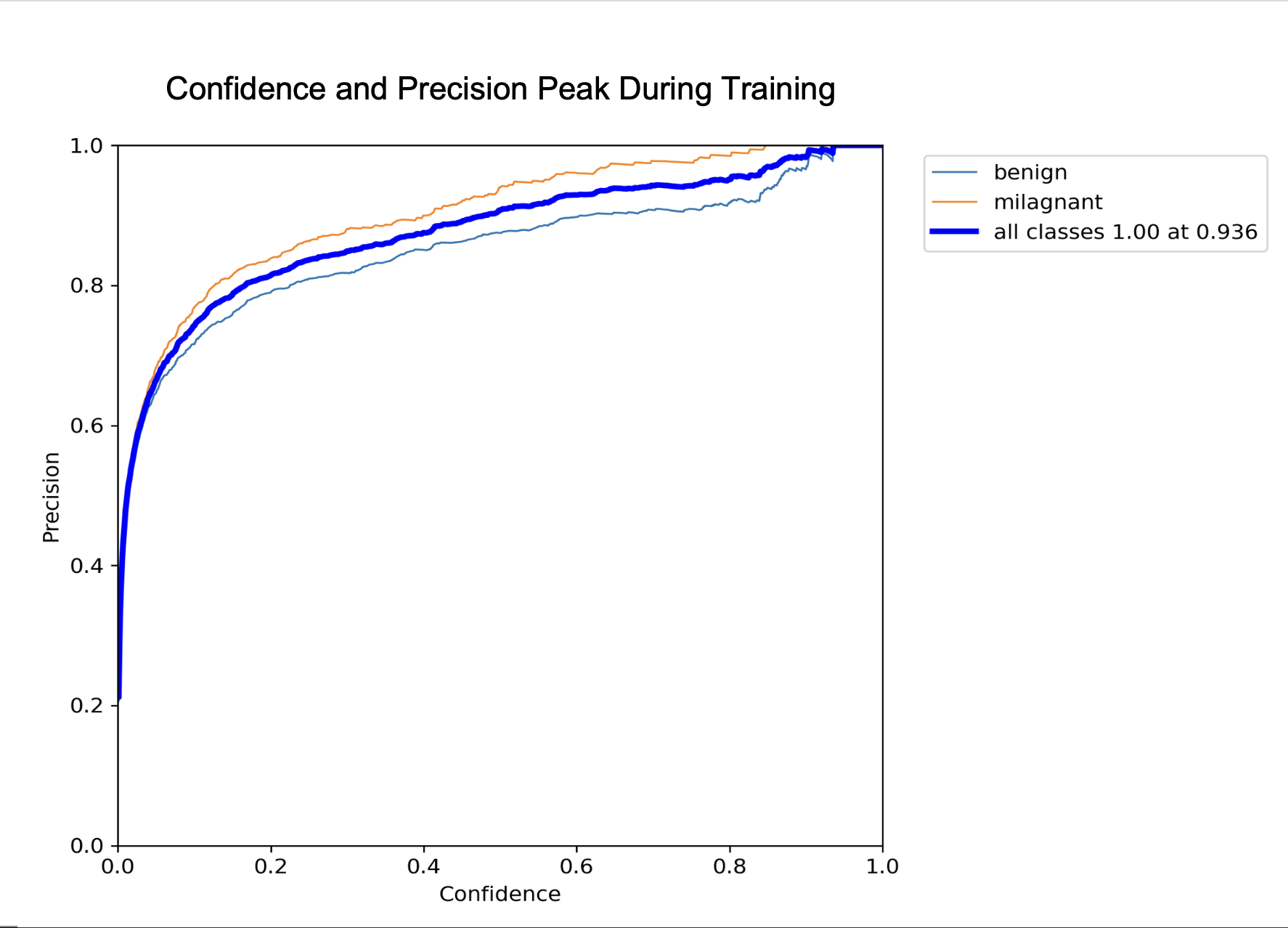

After training finishes, YOLOv5 measures the model's accuracy on images from the test directory. These images are separate from the training images, so they show whether the model works on lesions it has never seen. One test uses images that look similar to the training data, such as an eyeball, to see how the model responds to them. YOLOv5 then plots graphs of the confidence, precision, and recall values.

YOLOv5 also saves the best checkpoint, the version of the model that scored highest on the validation metrics during training, as best.pt. This file is the model that runs our melanoma screening object detection program.
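Precision and recall come from comparing the model's boxes with the labelled boxes in the test images. A detection usually counts as correct (a true positive) when it has the right class and overlaps a labelled box with an intersection over union (IoU) of at least 0.5; any other detection is a false positive, and a labelled lesion that no detection claims is a false negative. The simplified Python sketch below shows that bookkeeping. It illustrates the idea and is not YOLOv5's own evaluation code, which also sweeps over confidence thresholds and averages over several IoU thresholds to compute mAP (mean average precision).

```python
def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, truths, iou_thresh=0.5):
    """Count true positives, false positives and false negatives.

    preds:  list of (class_id, confidence, box) from the model
    truths: list of (class_id, box) from the label files
    Each prediction, highest confidence first, claims the best-overlapping
    unclaimed labelled box of the same class if the IoU is high enough.
    """
    unmatched = list(truths)
    tp = fp = 0
    for cls, _conf, box in sorted(preds, key=lambda p: p[1], reverse=True):
        same_class = [t for t in unmatched if t[0] == cls]
        best = max(same_class, key=lambda t: iou(box, t[1]), default=None)
        if best is not None and iou(box, best[1]) >= iou_thresh:
            unmatched.remove(best)
            tp += 1
        else:
            fp += 1
    return tp, fp, len(unmatched)  # leftover labelled boxes are false negatives


def precision_recall(tp, fp, fn):
    """Precision: share of detections that were correct. Recall: share of lesions found."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    # Made-up example: one malignant (class 1) and one benign (class 0) lesion
    truths = [(1, (0, 0, 10, 10)), (0, (20, 20, 30, 30))]
    preds = [
        (1, 0.9, (0, 0, 10, 10)),    # correct malignant detection
        (1, 0.6, (20, 20, 30, 30)),  # benign lesion called malignant
    ]
    tp, fp, fn = match_detections(preds, truths)
    print(tp, fp, fn)                    # 1 1 1
    print(precision_recall(tp, fp, fn))  # (0.5, 0.5)
```

In the example, the benign lesion labelled as malignant counts twice: as a false positive for the malignant class and as a missed (false negative) benign lesion.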

3. Coding the Program

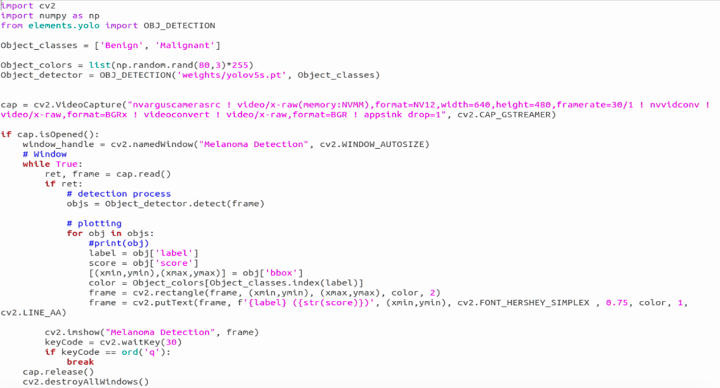

Finally, I code the program to utilize the previously trained model.

Import OpenCV: Start by importing OpenCV to handle image processing and capture live video streams from the camera.

Initialize Classes: Define the object classes 'Benign' and 'Malignant' within the program to label the two types of tumours detected by the model.

Set Up GStreamer: Configure the GStreamer pipeline to ensure the video stream is properly formatted and displayed with the correct quality on the screen.

Load YOLOv5 Model: Implement code to load the trained YOLOv5 model ('best.pt') so it can process the incoming video stream in real time.

Bounding Box Detection: Use OpenCV functions to process the video frames, applying the YOLOv5 model to detect lesions and draw bounding boxes around them.

Display Results: Code the final step to display the annotated video stream live, showing the detected lesions with labels indicating whether they are benign or malignant.

Part of the program I coded. The screenshot was taken directly from the Jetson Nano.

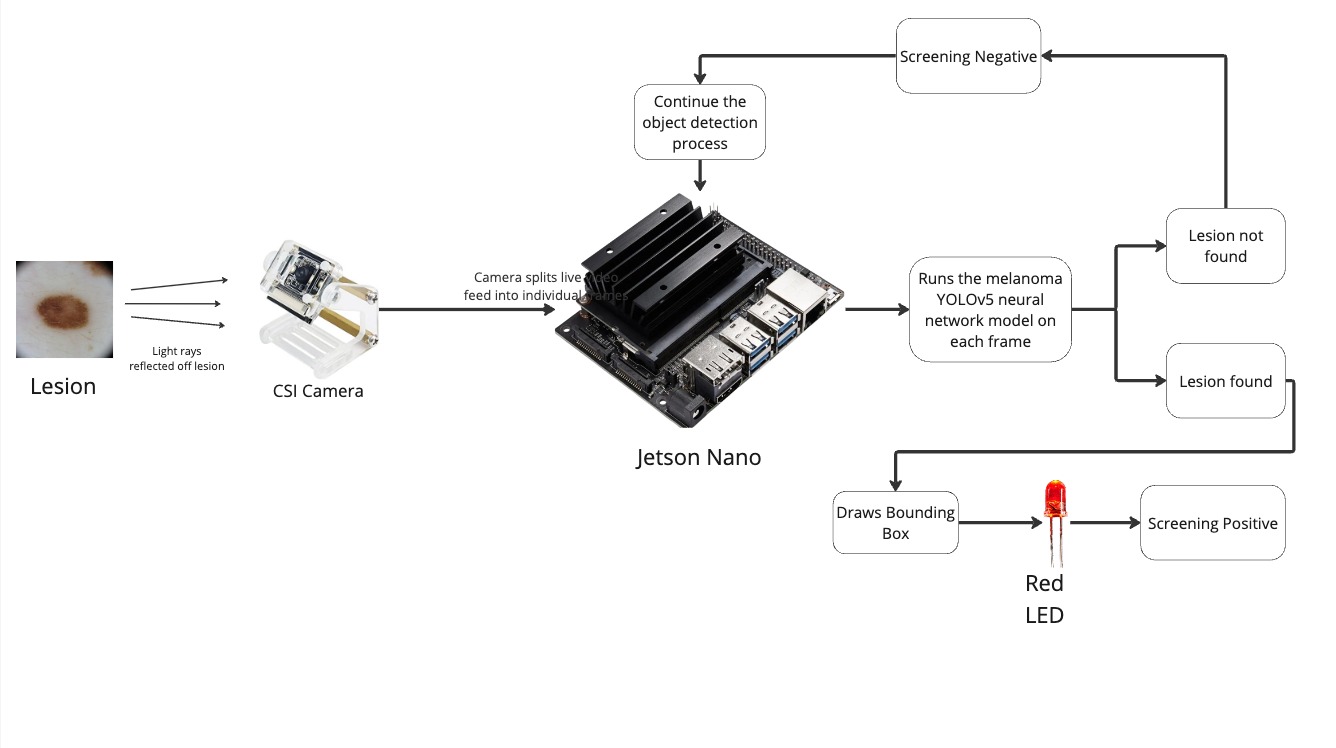

Flowchart detailing how my program works.
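The program steps described in this section can be combined into one short script. The Python sketch below assumes a CSI camera, the `best.pt` weights in the working folder, and a red LED wired to a GPIO pin. The pin number, confidence threshold, class order, and window title are hypothetical, and the GStreamer string is the commonly used pipeline for the Jetson Nano's CSI camera rather than the one in the original program. It loads the model through PyTorch Hub (`torch.hub.load("ultralytics/yolov5", "custom", path=...)`) and drives the LED with NVIDIA's `Jetson.GPIO` library.

```python
CLASS_NAMES = ["Benign", "Malignant"]  # assumed order: class 0 = benign, 1 = malignant
CONF_THRESHOLD = 0.5                    # hypothetical minimum confidence to report a lesion
LED_PIN = 12                            # hypothetical BOARD pin number for the red LED


def gstreamer_pipeline(width=1280, height=720, fps=30, flip=0):
    """GStreamer pipeline that reads the Jetson Nano CSI camera and outputs BGR frames for OpenCV."""
    return (
        f"nvarguscamerasrc ! video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! nvvidconv flip-method={flip} ! "
        "video/x-raw, format=BGRx ! videoconvert ! video/x-raw, format=BGR ! appsink"
    )


def label_text(class_id, conf):
    """Text drawn above a bounding box, e.g. 'Malignant 0.87'."""
    return f"{CLASS_NAMES[int(class_id)]} {conf:.2f}"


def main():
    # Imported here so the helper functions above can be used without these libraries
    import cv2                  # OpenCV: capture, drawing, display
    import torch                # PyTorch, which YOLOv5 runs on
    import Jetson.GPIO as GPIO  # NVIDIA's GPIO library for the Jetson header pins

    model = torch.hub.load("ultralytics/yolov5", "custom", path="best.pt")
    model.conf = CONF_THRESHOLD  # ignore detections below this confidence

    GPIO.setmode(GPIO.BOARD)
    GPIO.setup(LED_PIN, GPIO.OUT, initial=GPIO.LOW)
    cap = cv2.VideoCapture(gstreamer_pipeline(), cv2.CAP_GSTREAMER)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            # YOLOv5 expects RGB images; OpenCV delivers BGR
            results = model(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            detections = results.xyxy[0].tolist()  # rows of [x1, y1, x2, y2, conf, class]
            for x1, y1, x2, y2, conf, cls in detections:
                cv2.rectangle(frame, (int(x1), int(y1)), (int(x2), int(y2)), (0, 0, 255), 2)
                cv2.putText(frame, label_text(cls, conf), (int(x1), max(int(y1) - 8, 15)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)
            # Light the LED while at least one lesion is on screen
            GPIO.output(LED_PIN, GPIO.HIGH if detections else GPIO.LOW)
            cv2.imshow("Melanoma screening", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press Q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
        GPIO.cleanup()


if __name__ == "__main__":
    main()
```

PyTorch Hub downloads the YOLOv5 code from GitHub on first use; if the repository is already cloned, `torch.hub.load` can instead be pointed at the local folder with `source="local"`.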

Analysis

Trial and Error

Training involved trial and error. YOLOv5 trains over many epochs (complete passes through the training images). After each epoch, it evaluates the model on the validation images; when a version falls short, training continues and adjusts the weights again, aiming for the best confidence score and precision.

When a version achieves the best score so far, it is checkpointed and saved as best.pt, which can then be deployed for real-time object detection.
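YOLOv5 picks the checkpoint to save as best.pt using a single "fitness" score computed on the validation images after every epoch. With the default settings, this score is 10% mAP@0.5 plus 90% mAP@0.5:0.95 (mean average precision, averaged over IoU thresholds from 0.5 to 0.95), while precision and recall get zero weight. The Python sketch below reproduces that weighting to show how one epoch wins; the function names are hypothetical and the metric values are invented for illustration.

```python
# Default weights of YOLOv5's fitness score, in the order [P, R, mAP@0.5, mAP@0.5:0.95]
FITNESS_WEIGHTS = (0.0, 0.0, 0.1, 0.9)


def fitness(precision, recall, map50, map50_95):
    """Weighted validation score: 10% mAP@0.5 plus 90% mAP@0.5:0.95."""
    metrics = (precision, recall, map50, map50_95)
    return sum(w * m for w, m in zip(FITNESS_WEIGHTS, metrics))


def best_epoch(history):
    """Index of the epoch whose (P, R, mAP@0.5, mAP@0.5:0.95) gives the highest fitness."""
    return max(range(len(history)), key=lambda i: fitness(*history[i]))


if __name__ == "__main__":
    # Made-up metrics for three epochs, not results from this project
    history = [
        (0.70, 0.60, 0.65, 0.40),
        (0.80, 0.70, 0.75, 0.50),
        (0.82, 0.68, 0.74, 0.49),
    ]
    print(best_epoch(history))  # 1: the second epoch would be saved as best.pt
```

Because the score is built only from accuracy metrics, inference speed plays no part in choosing best.pt.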

Analysis

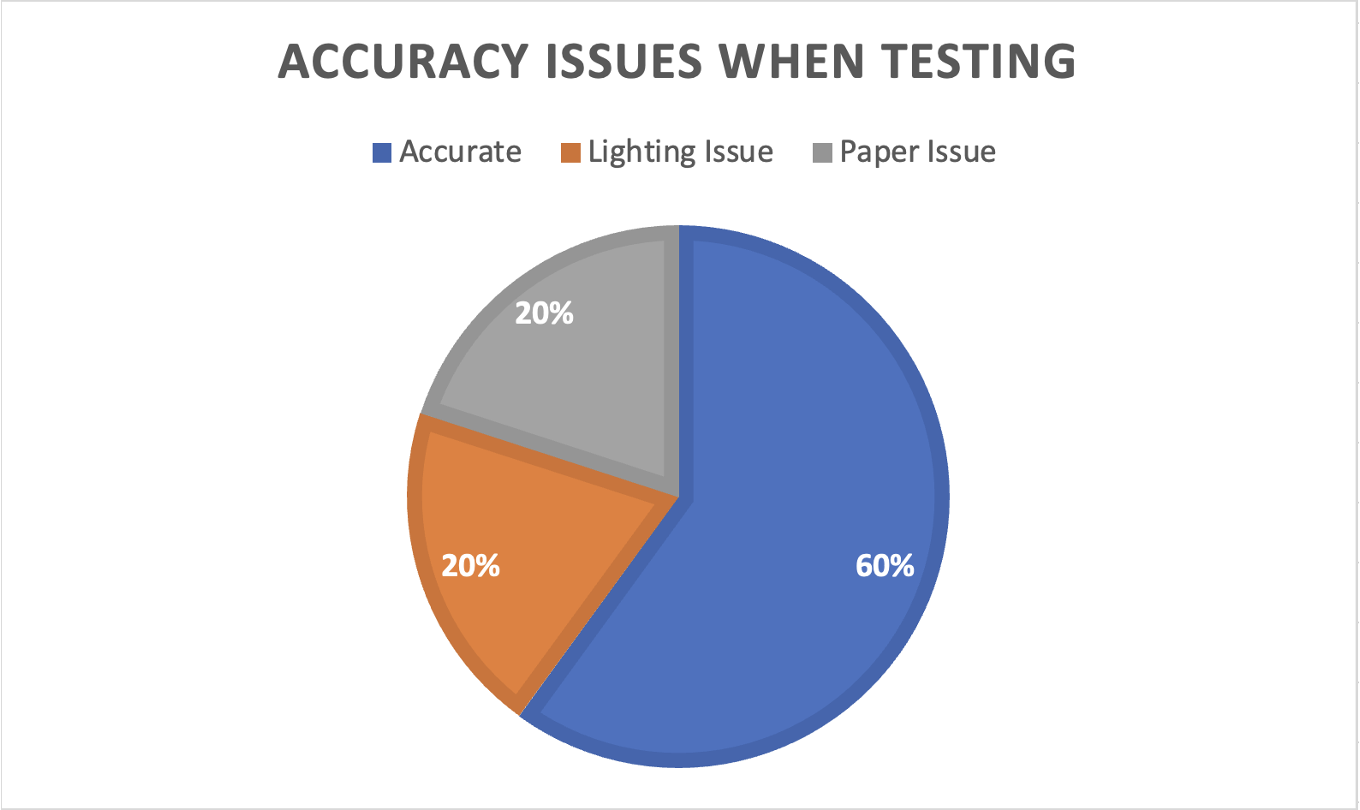

My real-time YOLOv5 object detection software is accurate; however, it can produce false positives, meaning that benign tumours are sometimes incorrectly classified as malignant (cancerous) tumours. False positives arise from issues such as lighting errors, the angle at which the sheet of paper is held, and the camera's focus (e.g., a blurry image). I ran a test ten times to identify the issues that caused false positives, using images from the test set to verify that the model was not overfitting.
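Whether a borderline lesion is reported as malignant depends on a confidence threshold, and moving that threshold trades false positives against false negatives. The Python sketch below counts both error types at a few thresholds for a single "malignant" decision; the function name and the scores are made up for illustration and are not this project's test results.

```python
def count_errors(scores, is_malignant, threshold):
    """Count false positives and false negatives for the 'malignant' call.

    scores:       the model's confidence that each lesion is malignant (0 to 1)
    is_malignant: the true answer for each lesion (from the labels)
    """
    fp = sum(1 for s, y in zip(scores, is_malignant) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, is_malignant) if s < threshold and y)
    return fp, fn


if __name__ == "__main__":
    # Made-up scores for six lesions, not data from this project
    scores = [0.95, 0.80, 0.55, 0.40, 0.30, 0.10]
    is_malignant = [True, True, False, True, False, False]
    for t in (0.25, 0.5, 0.7):
        fp, fn = count_errors(scores, is_malignant, t)
        print(f"threshold {t}: {fp} false positives, {fn} false negatives")
```

With these numbers, lowering the threshold from 0.5 to 0.25 removes the missed malignant lesion (false negatives drop from 1 to 0) but adds a false alarm (false positives rise from 1 to 2). For a screening tool, accepting extra false positives in exchange for fewer false negatives is usually the safer choice.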

When the program runs, the object detection software splits the video from the CSI camera into frames and runs the YOLOv5 model on each frame. When an object is detected in a frame, OpenCV draws a bounding box around it, and the LED flashes. This process repeats until the "Q" key is pressed, at which point the program closes.

Conclusion

My real-time melanoma screening and classification software works well and is accurate; however, it makes some false positive misclassifications, such as classifying benign tumours or certain shapes as malignant tumours. These are caused by issues such as lighting errors, the angle at which the sheet of paper is held, and the camera's focus (e.g., a blurry image). I discussed my project with a healthcare professional who works at the Tom Baker Cancer Centre. He told me that false positives are manageable, but false negatives are very serious: "If it is a false negative, things might go unnoticed, which can create more problems. If it is a false positive, it's OK since we can still check it and confirm, 'No, it is not melanoma.'" Because of the potential for false negatives, the program should be used only as a screening tool, not for diagnosis; healthcare professionals should be the ones making the diagnosis.

Future Improvements

Future improvements I could make to my melanoma screening tool include:

1. Mobile App Implementation. I will deploy the trained YOLOv5 model in a mobile app for easier, more accessible screening and identification.

2. Screenshot Feature. When the model detects a tumour, it will take a screenshot and send an email to the doctor’s office, specifying the type of tumour detected.

3. Smart Bandage. I will build the AI model into a monitoring device that provides the necessary treatment when required.

Citations

- Canadian Cancer Society / Société canadienne du cancer. (n.d.). Types of non-melanoma skin cancer. Canadian Cancer Society. https://cancer.ca/en/cancer-information/cancer-types/skin-non-melanoma/what-is-non-melanoma-skin-cancer/types-of-non-melanoma

- Jocher, G. (2023, April 13). Train Custom Data. GitHub. https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data

- Melanoma - statistics. Cancer.Net. (2023, August 9). https://www.cancer.net/cancer-types/melanoma/statistics

- Melanoma skin cancer. Cancer Research UK. (2022, December 12). https://www.cancerresearchuk.org/about-cancer/melanoma

- Skin cancer. American Academy of Dermatology. (n.d.). https://www.aad.org/media/stats-skin-cancer

- Skin cancer. Cancer Research UK. (2023, March 22). https://www.cancerresearchuk.org/about-cancer/skin-cancer

- Swetter, S., & Geller, A. (n.d.). Melanoma: Clinical features and diagnosis. UpToDate. https://www.uptodate.com/contents/melanoma-clinical-features-and-diagnosis?search=MELANOMA&source=search_result&selectedTitle=1~150&usage_type=default&display_rank=1

- Walidamamou. (2024, February 2). Why yolov7 is better than CNN in 2024? UBIAI. https://ubiai.tools/why-yolov7-is-better-than-cnns/#:~:text=The%20key%20innovation%20of%20YOLO,both%20region%20proposal%20and%20classification

- WebMD. (n.d.). Skin cancer: Melanoma, basal cell and squamous cell carcinoma. WebMD. https://www.webmd.com/melanoma-skin-cancer/skin-cancer

Acknowledgement

I would like to thank the following professionals who provided feedback and recommendations to improve my project.

Dr. Ryan Lewinson, a dermatologist with an MD and PhD in Biomedical Engineering, is recognized for his innovative skincare solutions as the CEO of All Skin Inc.

Dr. Rodel Cenabre is a Family and GP Oncology Associate at Alberta Health Services. He has made significant contributions to patient care and cancer management.

Special thanks to Ms. Bretner, science fair teacher at the STEM Innovation Academy, for her feedback, and to my parents for their support.