Now You See Me Too

Suhandan Thangadurai

Grade 8

Presentation

Problem

Studies from recent years consistently reveal a troubling pattern, indicating a significantly higher risk of pedestrian collisions, hospitalizations, and fatalities among individuals with various forms of disability. In ten separate studies, strong associations were established between disability and a range of pedestrian road-related incidents, including collisions, self-reported injuries, and hospitalizations. Notably, the odds and risks associated with disability in these studies are alarming, ranging from two to five times higher. This statistical significance not only highlights the increased risk but also emphasizes the substantial practical implications for the safety of disabled pedestrians navigating road environments. Addressing this issue is essential to ensure the well-being and safety of disabled individuals. It emphasizes the urgency for innovative solutions, such as integrating object detection functionality into headphones, to mitigate the heightened risks these vulnerable populations face.

This project aims to enhance the safety of various communities, such as individuals with visual impairments, pedestrians, children commuting from school, and those who are deaf or hard of hearing, by integrating object detection functionality into headphones. This is achieved by incorporating an ESP32 camera that has been programmed with machine-learning algorithms to recognize objects such as humans, dogs, cars, and bicyclists. Additionally, the headphones can display distinct colours when a particular group has been identified.

Method

| Material | Cost per unit | Units used | Cost |
| --- | --- | --- | --- |
| ESP-32 CAM | $14.28 | 2 | $28.55 |
| RGB LED | $0.14 | 2 | $0.28 |
| Bluetooth Headphones | $19.97 | 1 | $19.97 |
| Wires | $0.10 | 6 | $0.60 |
| Powerbank | $6.00 | 2 | $12.00 |
| 3D printed shell | $1.96 | 2 | $3.92 |
| Total Cost | | | $65.32 |
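As a quick check of the arithmetic, the total can be recomputed from the line costs listed in the table. This is only a small sketch: the names `BILL_OF_MATERIALS` and `total_cost` are made up for illustration, and the line costs are copied as listed (the ESP-32 CAM line of $28.55 is one cent below 2 × $14.28, presumably because the unit price was rounded).

```python
from decimal import Decimal

# (material, units used, line cost exactly as listed in the table)
BILL_OF_MATERIALS = [
    ("ESP-32 CAM", 2, Decimal("28.55")),
    ("RGB LED", 2, Decimal("0.28")),
    ("Bluetooth Headphones", 1, Decimal("19.97")),
    ("Wires", 6, Decimal("0.60")),
    ("Powerbank", 2, Decimal("12.00")),
    ("3D printed shell", 2, Decimal("3.92")),
]


def total_cost(items):
    """Add up the line costs; Decimal avoids floating-point rounding on money."""
    return sum((cost for _, _, cost in items), Decimal("0"))


print(total_cost(BILL_OF_MATERIALS))  # 65.32
```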

Design/Method

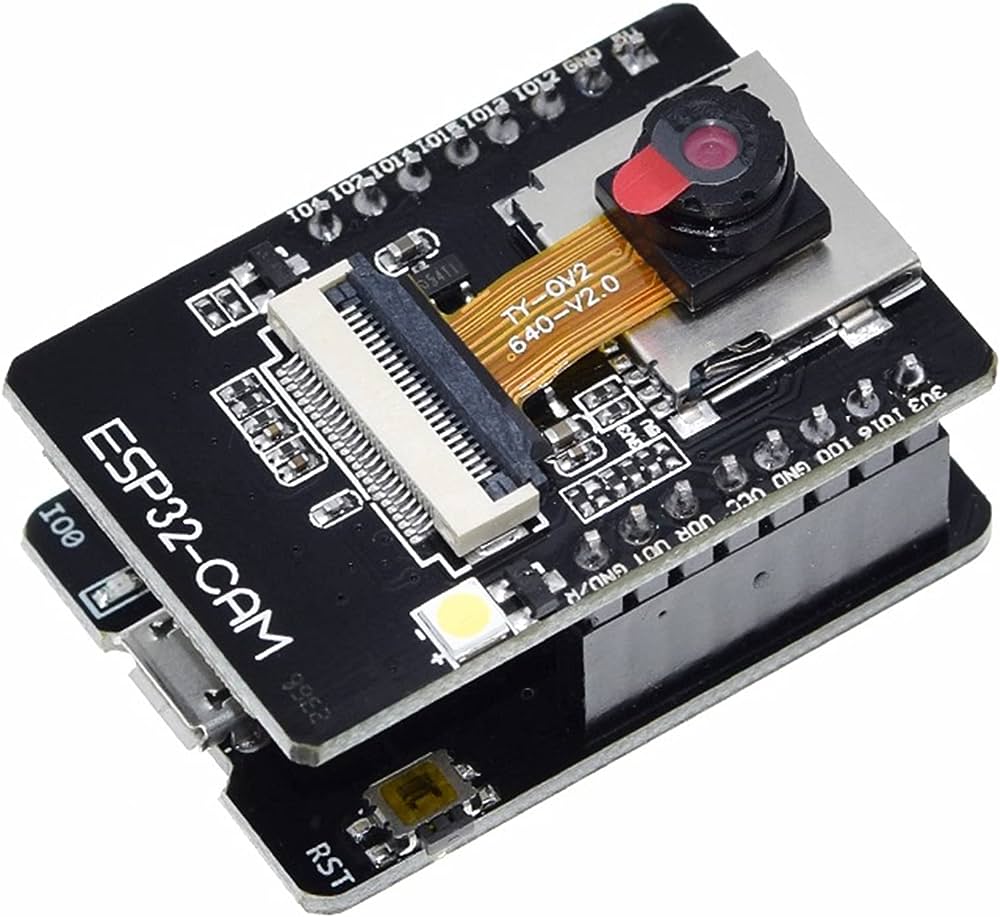

To effectively implement machine learning algorithms for object detection, a programmable camera was a crucial component for the device's development. Two options were considered: the Husky Lens and the ESP32 camera. Despite the Husky Lens' facial recognition capabilities and standard camera size, it was not the best fit for the project's goal of a cost-effective and compact solution. The Husky Lens was prohibitively expensive, cumbersome, and incompatible with other electronic components due to its unique software. Moreover, its functionality was limited to facial recognition, which made it unsuitable for embedding other object detection algorithms.

Consequently, the ESP32-CAM module was selected. The ESP32 camera is a programmable board that can be used with various coding software, such as Arduino and OpenCV. It not only uses the same software as other electronic components in this device, such as the RGB LED and distance sensor, but also has GPIO pins that allow these components to be attached directly to the camera. This feature enables a compact and cost-effective solution, eliminating the need for an additional board to control other components.

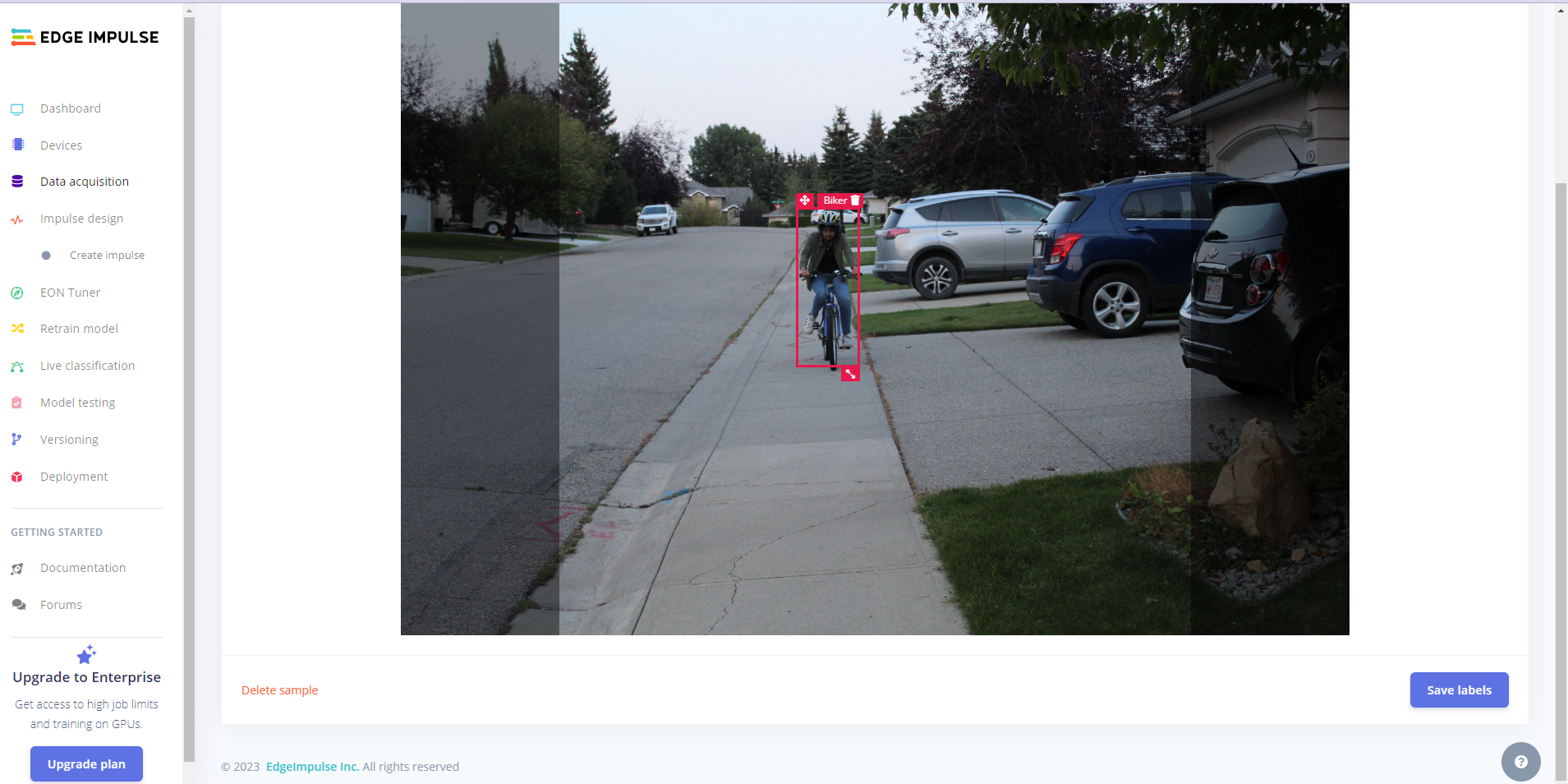

To program the camera, software was needed that allows users to create and train a model to detect various objects, in this case cars, bikers, dogs, and humans. Various machine-learning platforms were researched, starting with MediaPipe and OpenCV. OpenCV is a well-known computer vision library that offers a range of image and video processing capabilities. MediaPipe is a machine learning framework that is compatible with multiple platforms and is commonly used with OpenCV. MediaPipe also provides several pre-trained models for computer vision applications, including face detection, hand detection, and pose detection.

After further research, however, it was found that OpenCV and MediaPipe were mainly used for pose and hand detection and offered limited flexibility because they relied on pre-trained models. Although facial recognition was available, the goal of the device was to recognize multiple kinds of objects, not just faces. OpenCV and MediaPipe were therefore not suitable for this project.

After several more hours of research, a platform called Edge Impulse was found. Edge Impulse is a widely used development platform for machine learning that can be integrated with many software tools, is free for developers, and is used by enterprises worldwide. The company aims to give every developer and device maker the best development and deployment experience for machine learning on the edge, focusing on sensor, audio, and computer vision applications. Edge Impulse is known for the flexibility of starting with zero pre-loaded object detection algorithms, which allowed a model to be trained from scratch by uploading and labelling images. It was chosen because it allowed machine learning to be integrated seamlessly into the device.

Above is part of the model training process, in which every image has to be labelled as a biker, dog, human, or car.

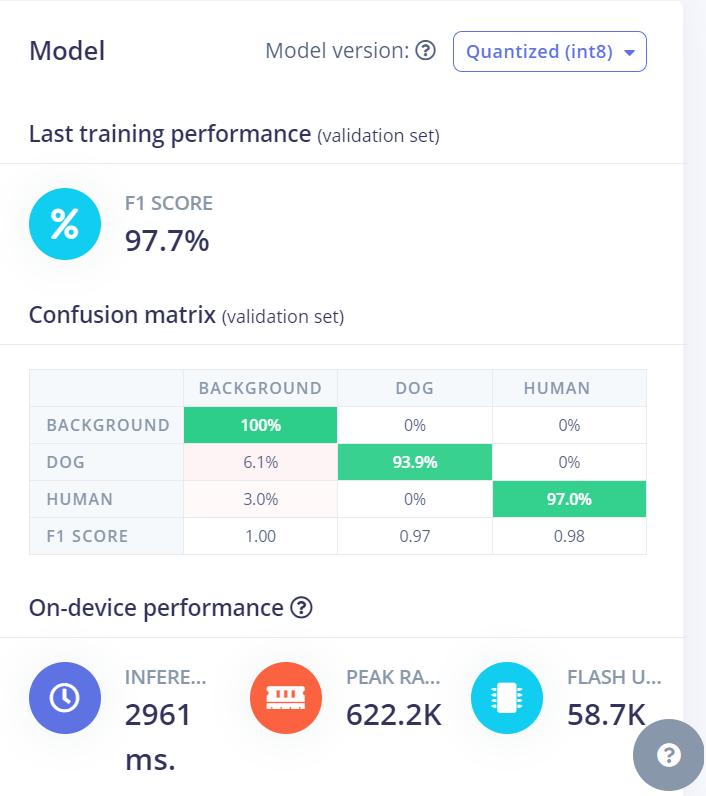

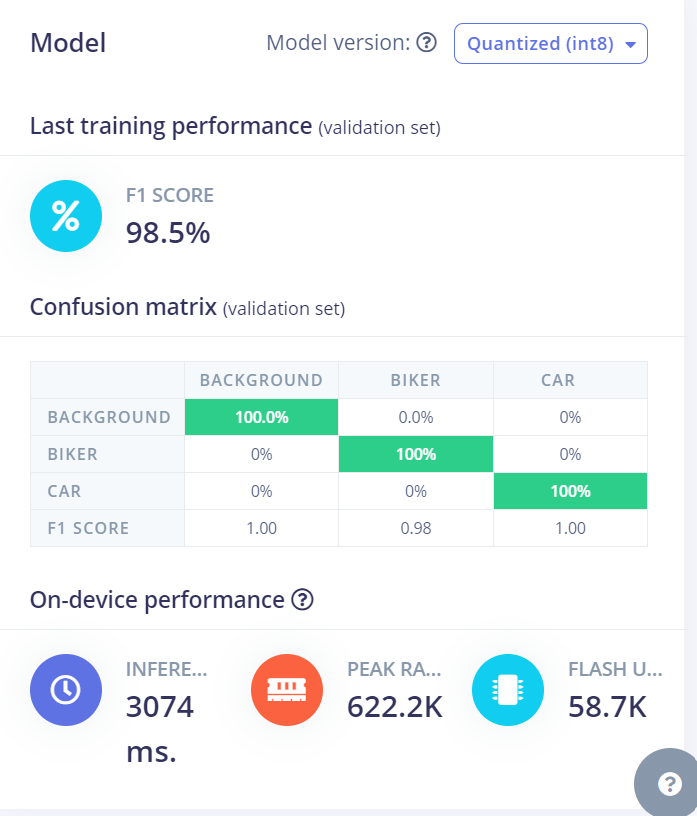

The implemented object detection system, built with Edge Impulse, recognizes persons, dogs, cars, and bicycles with precision. During testing, the system consistently displayed reliable detection capabilities, which supports the goal of improving the safety of vulnerable communities. The accuracy was 97.7% for dogs and humans and 98.5% for bikers and cars, or 98.1% overall. The accuracy is based on the F1 score.

The F1 score explained

Imagine you have a bunch of data, and you want a computer program to decide whether something is positive or negative. We care about two things:

- Precision: This is like asking, "When the computer says something is positive, how often is it correct?" The formula for precision is:

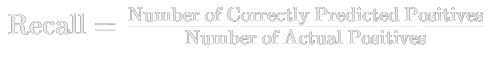

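- Recall: This is like asking, "Out of all the actual positive things, how many did the computer manage to find?" The formula for recall is:

$$\text{Recall} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}}$$

Now, the F1 score combines both precision and recall to give an overall measure of how well the computer is doing. The formula for the F1 score is:

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$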

It's like finding a balance between how often the computer is right when it says something is positive (precision) and how many positive things it can find overall (recall). If the F1 score is high, it means the computer is doing a good job in both aspects.
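To make the idea concrete, here is a small worked sketch of precision, recall, and the F1 score using the standard definitions. The counts (90 true positives, 10 false positives, 30 false negatives) are invented purely for illustration and are not results from this project. The last line shows that the 98.1% overall figure matches the plain average of the two reported model scores, assuming that is how it was obtained.

```python
def precision(tp, fp):
    """Of everything predicted positive, the fraction that really was positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Of everything that really was positive, the fraction that was found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    p = precision(tp, fp)
    r = recall(tp, fn)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts, for illustration only.
tp, fp, fn = 90, 10, 30
print(round(precision(tp, fp), 3))     # 0.9
print(round(recall(tp, fn), 3))        # 0.75
print(round(f1_score(tp, fp, fn), 3))  # 0.818

# Average of the two reported model scores (dogs/humans and bikers/cars).
print(round((97.7 + 98.5) / 2, 1))     # 98.1
```

Notice that the F1 score (0.818) sits between precision and recall but closer to the lower of the two, which is why a model cannot get a high F1 score by being good at only one of them.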

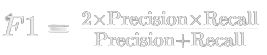

The image above is a screenshot of a section of the code; it took many tries to get the code to work with the camera. At the bottom of the screenshot is the Serial Monitor. When the ESP32 camera module is connected to a computer, it shows the exact timestamp of when an object was detected and how confident the model was that it was in fact that object. In this case, the model was 67% sure that a dog was detected, which is correct.
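The step of turning the model's confidence values into a single decision can be sketched as follows. This is not the project's Arduino code: it is a Python illustration, and the function name `best_detection`, the list-of-pairs format, and the 0.6 cut-off are all assumptions made for the example.

```python
CONFIDENCE_THRESHOLD = 0.6  # hypothetical cut-off, not taken from the project


def best_detection(detections, threshold=CONFIDENCE_THRESHOLD):
    """Return the (label, confidence) pair with the highest confidence
    at or above the threshold, or None if nothing is confident enough."""
    candidates = [d for d in detections if d[1] >= threshold]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d[1])


# One frame in which the model is 67% sure it sees a dog.
frame = [("dog", 0.67), ("human", 0.21)]
print(best_detection(frame))  # ('dog', 0.67)
```

Using a threshold like this means a low-confidence guess does not trigger an alert, at the cost of sometimes missing a real object.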

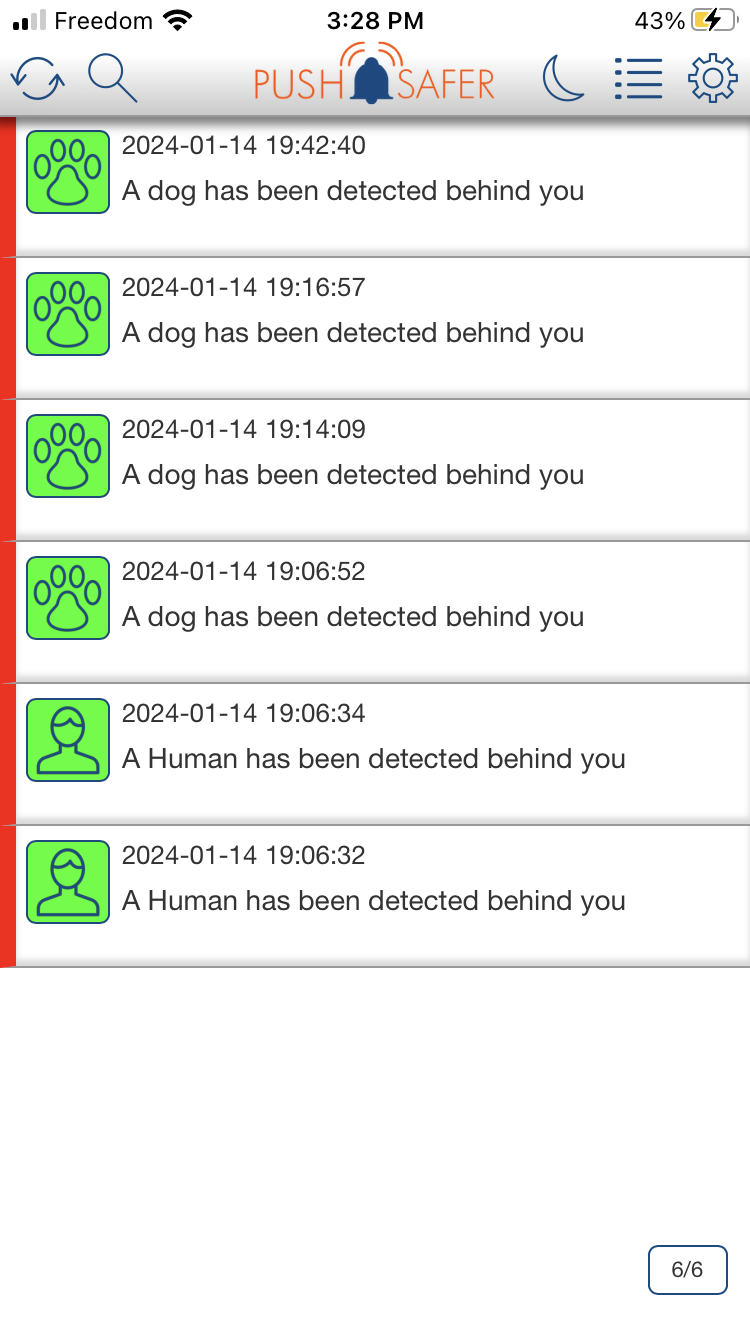

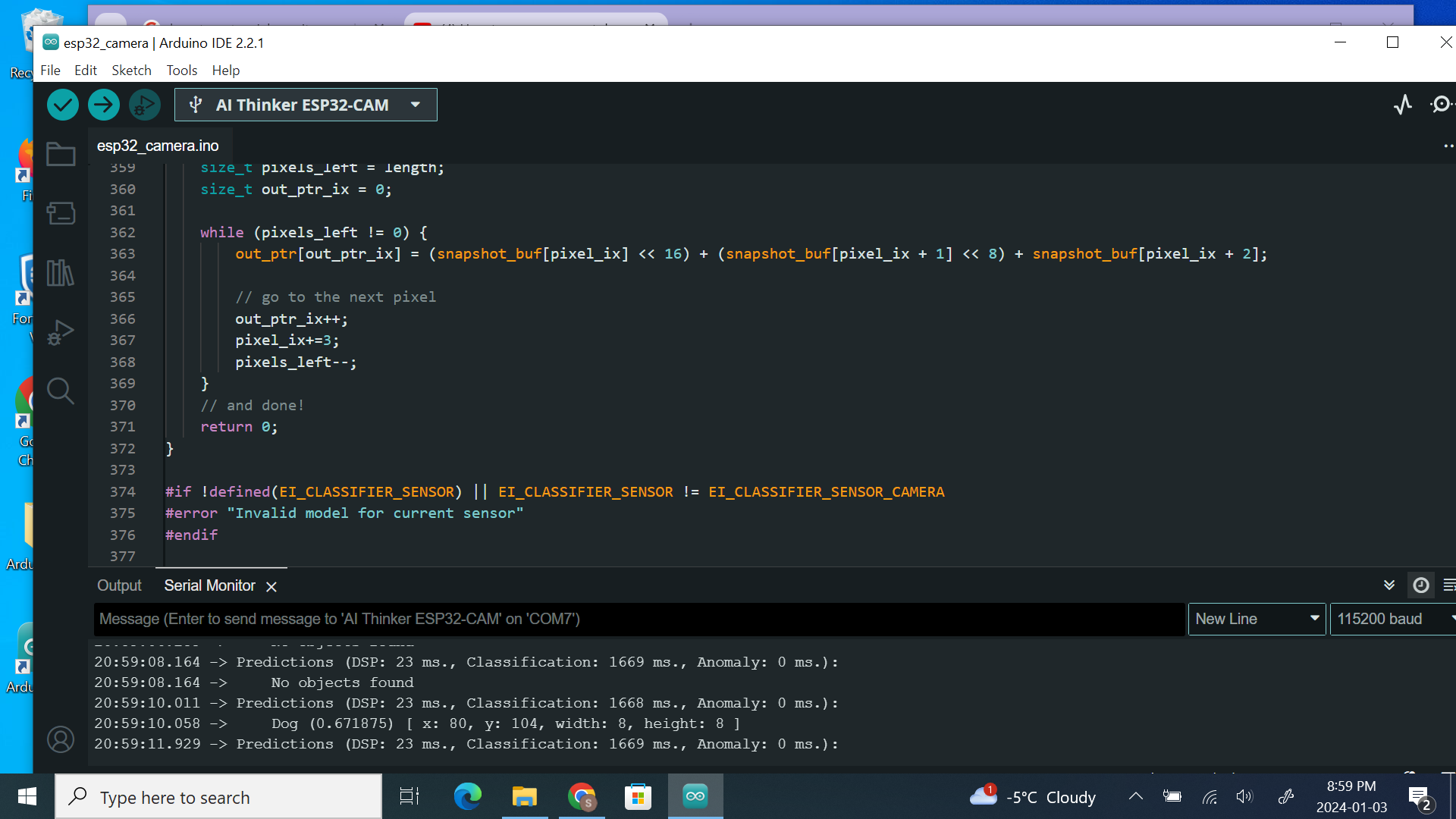

Next, a sound system was required to alert the user to any detected objects. Originally, when an object was detected, a DFPlayer audio module would play an audio file stating which object had been detected. The file would be played on a pair of external speakers that would later be attached to the inside of the headphones. Unfortunately, the ESP32-CAM could not supply enough voltage to power the module that plays the audio file on the external speakers. Additionally, the MP3 module would have had to be connected to the speakers, leading to a bulkier and less practical shell, and speakers attached to the inside of the headphones would have been too uncomfortable against the user's ear. This solution was therefore abandoned.

The next step was to research Arduino-compatible apps that can send a notification to the user's phone the instant an object is detected, so that the notification could then be announced over the headphones. A common way to send notifications to a phone from Arduino is an app called Pushsafer. Pushsafer is a service to send and receive instant push notifications on a phone, tablet, or desktop. Through its API, Pushsafer can be integrated into almost any application. After troubleshooting, the device successfully sent a notification to the user's phone whenever an object was detected. Unfortunately, the feature that announces notifications did not work, because iPhones only announce notifications when the user is using an Apple audio device such as AirPods, and the headphones used were not made by Apple.

An alternative was to use a sound effect as the notification sound instead of announcing the notification. Pushsafer has a set of custom notification sounds, so when a dog was detected, it would play a dog bark; when a human was detected, it would play a beep; when a car was detected, it would play a car horn; and when a bike was detected, it would play a bicycle bell. This still allowed the user to be alerted aurally when an object is detected.
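The mapping from detected object to notification sound can be sketched like this. It is an illustration in Python rather than the project's Arduino code. The field names `k` (private key), `t` (title), `m` (message), and `s` (sound) follow the Pushsafer API parameters as I understand them and should be checked against the Pushsafer documentation. The numeric sound IDs and the message wording are placeholders, not the real values used in the project.

```python
# Sounds described in the text, one per detected class.
ALERT_SOUNDS = {
    "dog": "dog bark",
    "human": "beep",
    "car": "car horn",
    "bike": "bicycle bell",
}

# Placeholder IDs only: the real numbers must be looked up in Pushsafer's sound list.
HYPOTHETICAL_SOUND_IDS = {"dog bark": 1, "beep": 2, "car horn": 3, "bicycle bell": 4}


def build_notification(label, private_key):
    """Build the fields for one push notification without sending it."""
    if label not in ALERT_SOUNDS:
        raise ValueError(f"no alert sound configured for {label!r}")
    sound = ALERT_SOUNDS[label]
    return {
        "k": private_key,                          # assumed: Pushsafer private key
        "t": "Object detected",                    # assumed: title (wording is made up)
        "m": f"{label.capitalize()} detected nearby",  # assumed: message text
        "s": HYPOTHETICAL_SOUND_IDS[sound],        # assumed: sound ID (placeholder)
    }


print(build_notification("dog", "YOUR_PRIVATE_KEY")["m"])  # Dog detected nearby
```

Keeping the label-to-sound table separate from the code that builds the notification makes it easy to add a new object class later by adding one table entry.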

The image on the left shows how the notification appears on the lock screen as the notification sound plays. Note that in the updated code the icon changes from a bell to whatever is being detected (e.g. dog, biker, human).

The image on the right displays how the notifications show in the actual app.

Here is how the app appears on the web version and the list of tones that you could use.

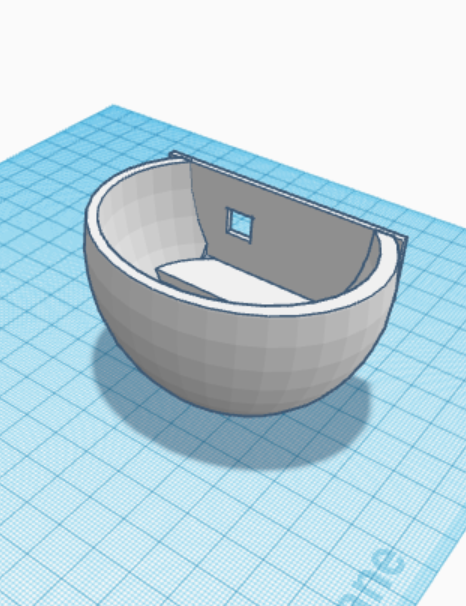

Before the external shell that attaches to the headphones was 3D printed, the RGB LED was soldered to the ESP32-CAM and programmed so that, when an object is detected, it lights up in a specific colour depending on the object. Next, the cameras on the headphones needed a power supply. The chosen solution was to use small power banks that attach to the external shell, one for each camera; these were ordered and later attached. After a few drafts of the design were made in TinkerCAD, the shell was printed and attached to the headphones, holding all the electronic components.
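The colour-per-object idea can be sketched as a simple lookup table. This is a Python illustration, not the project's Arduino code, and the specific colours are assumptions because the report does not say which colour belongs to which object. The `to_pwm` helper shows one detail that often matters with RGB LEDs: on a common-anode LED the values are inverted, so 0 is full brightness. Which LED type the project used is not stated.

```python
OFF = (0, 0, 0)

# Hypothetical colour assignments (red, green, blue), each from 0 to 255.
LABEL_COLOURS = {
    "human": (0, 255, 0),
    "dog": (0, 0, 255),
    "car": (255, 0, 0),
    "bike": (255, 255, 0),
}


def led_colour(label):
    """Colour to show for a detected label; the LED stays off for anything else."""
    return LABEL_COLOURS.get(label, OFF)


def to_pwm(colour, common_anode=False):
    """Convert a colour to PWM duty values, inverting them for a common-anode LED."""
    if common_anode:
        return tuple(255 - channel for channel in colour)
    return tuple(colour)


print(led_colour("car"))                             # (255, 0, 0)
print(to_pwm(led_colour("car"), common_anode=True))  # (0, 255, 255)
```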

This image shows the design that was custom-made for the headphones in TinkerCAD, including the opening for the camera window. It was then 3D printed into the physical form shown in the next image, after which the ear shell for the other side was printed.

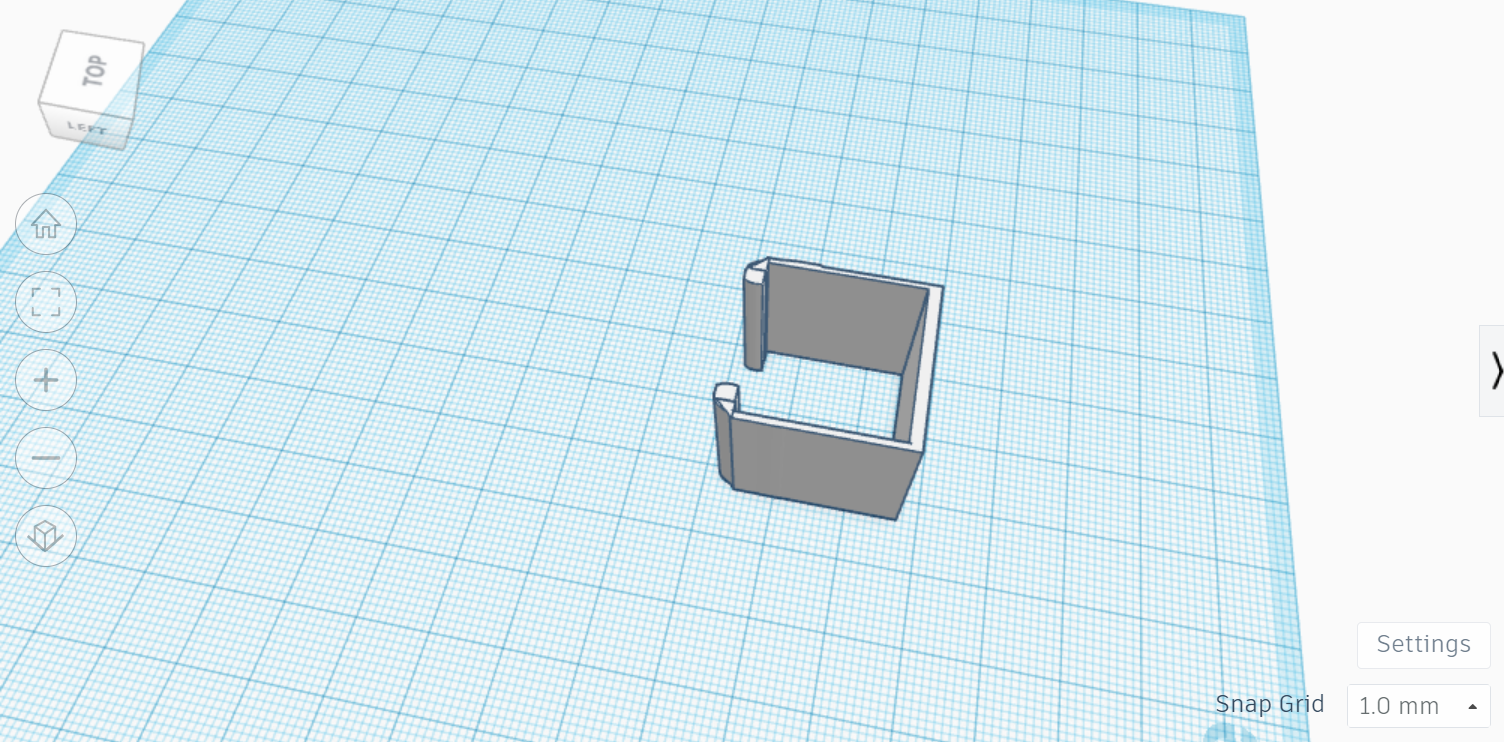

Since the power bank otherwise has to be carried in a pocket, a clip was designed for situations where the user's clothing has no pockets. The clip attaches to the end of the ear shell and holds the power bank.

This is the design sketch for the clip on TinkerCAD.

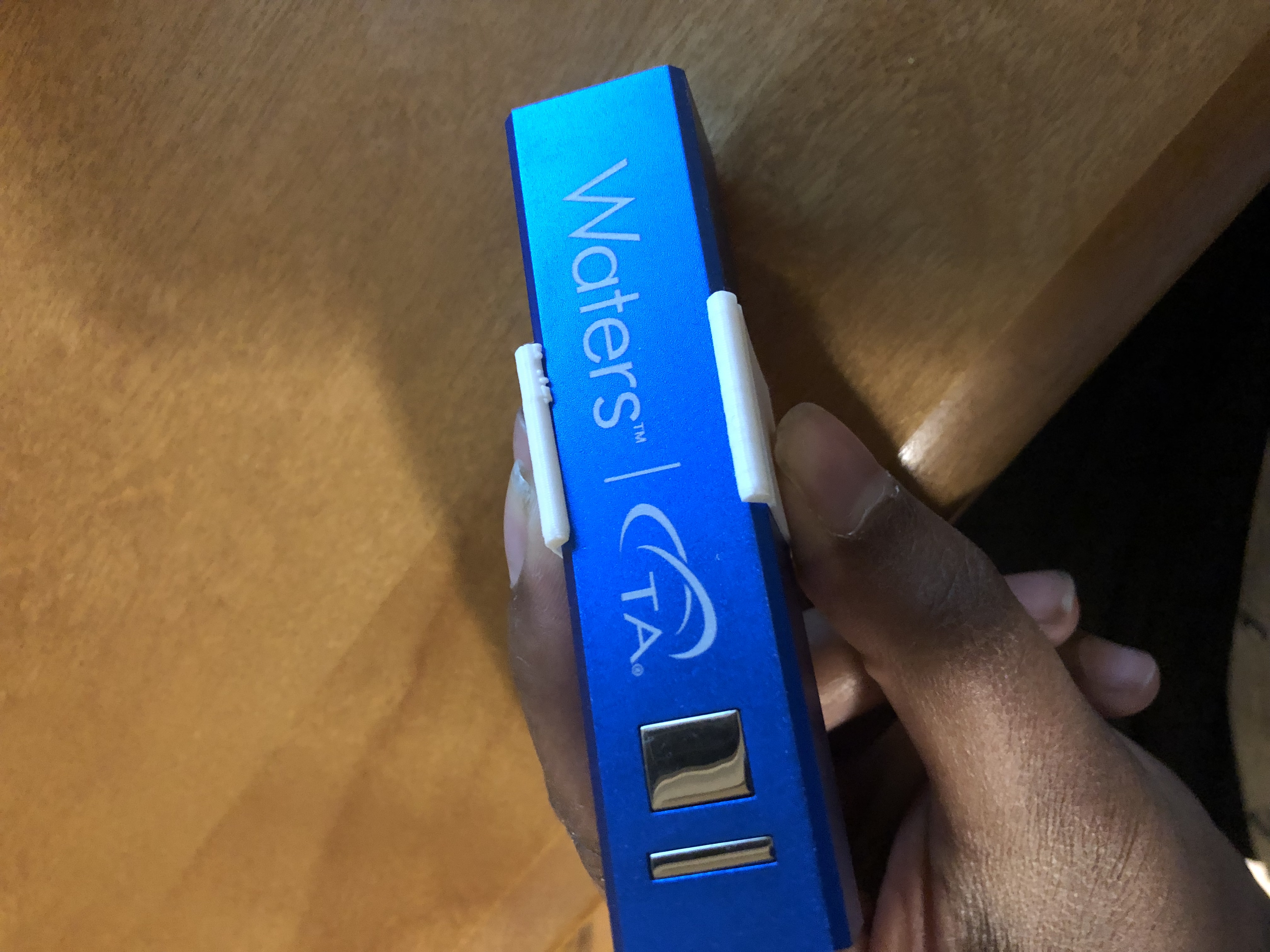

This is how the clip attaches to the power bank once printed.

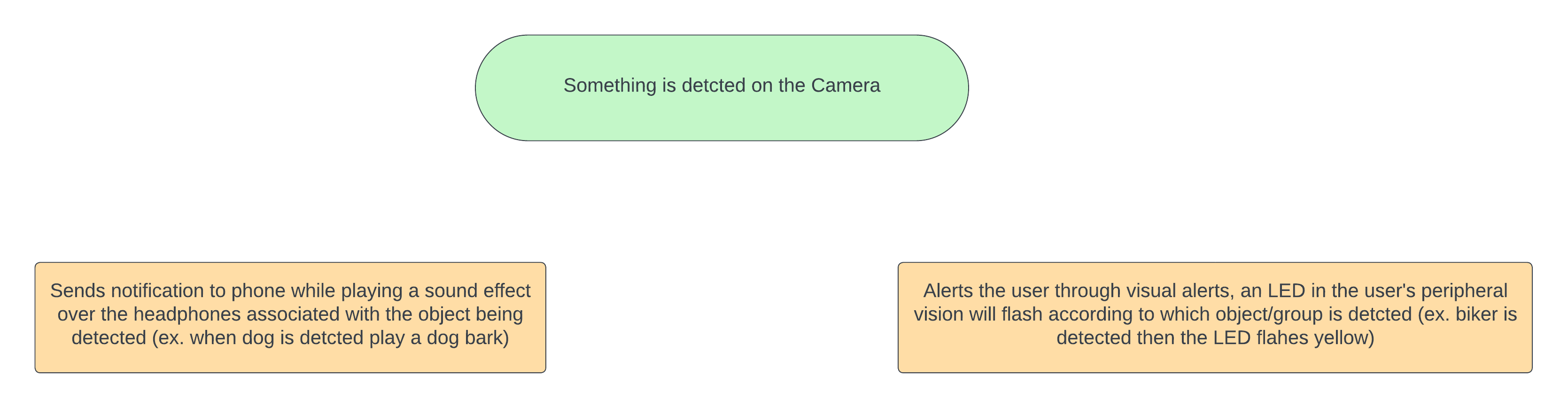

Summary of how it works:

THE FINAL PRODUCT:

Front view:

Back view:

Analysis

1. Performance Evaluation:

The implemented object detection system, which uses the ESP32-CAM and Edge Impulse, recognizes a variety of items with precision, including persons, dogs, vehicles, and bicycles. During testing, the system consistently displayed reliable detection capabilities, improving the safety of vulnerable communities with an accuracy of 98.1% overall. The difficulties that were found were primarily attributable to real-world differences in lighting conditions and object orientations.

Left: Accuracy of the camera detecting dogs and humans. Right: Accuracy of the camera detecting bicyclists and cars.

2. User Experience and Accessibility:

Users have provided positive reviews on the usefulness of the headphones' integrated functions. Colour-coded visual indications make it simple and accessible for persons with visual impairments to communicate information. Furthermore, despite initial iPhone compatibility issues, the sound warnings efficiently notify users of discovered objects. The implementation of both visual and audible cues improves the overall user experience, which aligns with the project's purpose of prioritizing the safety of people.

3. Technical Challenges and Solutions:

Technical issues, such as voltage output restrictions from the ESP-32 Camera, which impacted initial audio file playback, were successfully overcome by using Pushsafer for notifications and bespoke sound effects. This adaptive technique not only fixed the problem but also provided a more varied and user-friendly solution by allowing notification sounds to be customized based on identified items.

4. Integration of Components:

The 3D-printed external shell seamlessly combines all electronic components into the headphones. The compact design supports user comfort, and the positioning of a small power bank for each camera illustrates a sensible approach to powering the system. The effective integration of hardware components demonstrates the viability of deploying such safety-enhancing technologies in real-world circumstances.

5. Scalability and Future Improvements:

The current system provides a solid foundation for scalability, allowing for potential modification to various circumstances or environments. Future enhancements could concentrate on improving machine learning models for broader item detection and investigating more features for user customization. Additional improvements could include combining the headphones' battery and the camera's battery into one battery to reduce the weight of the device and provide a more polished look.

6. Comparison with Existing Solutions:

Compared to existing options, integrating object detection into headphones offers a novel and more practical approach to pedestrian safety. This technology stands out because it includes both visual and aural alarms, providing a comprehensive safety solution for people with disabilities. Other technologies focus solely on predicting and analyzing data from pedestrian collisions, which offers limited practicality for real-time safety enhancement.

The analysis shows the successful deployment of an innovative safety solution for vulnerable communities. The mix of hardware integration, user experience concerns, and adaptability distinguishes this project as a valuable addition to assistive technology for pedestrian safety.

Conclusion

In conclusion, it is possible to prevent pedestrian collisions and improve safety for pedestrians by enhancing headphones with object detection capabilities. By integrating an ESP32 camera with machine learning algorithms, the headphones exhibited precise object detection, offering a crucial safety feature for individuals with visual impairments, pedestrians, school-commuting children, and the deaf or hard of hearing. The detailed analysis highlighted the system's accuracy, user-friendly design, and seamless integration of visual and auditory alerts. The inclusion of colour-coded visual indicators and customizable sound alerts showcased the practicality and accessibility of the proposed safety solution. This device not only advances assistive technology but also addresses the pressing need for innovative solutions to reduce the heightened risks faced by vulnerable populations. Future enhancements could concentrate on improving machine learning models for broader item detection and investigating more features for user customization. Additional improvements could include combining the headphones' battery and the camera's battery into one battery to reduce the weight of the device and provide a sleeker look. The project's outcomes contribute to enhancing safety, promoting inclusivity, and setting the stage for future advancements in wearable technologies dedicated to prioritizing the well-being and safety of diverse pedestrian groups.

Citations

References

4imprint.ca. (n.d.). Block Power Bank. 4imprint.ca. https://www.4imprint.ca/product/C129593/Block-Power-Bank/

DFRobot. (n.d.). DFPlayer - A Mini MP3 Player. DFRobot. https://www.dfrobot.com/product-1121.html

Disabled and low-income pedestrians at ‘higher risk of road injury.’ (2018, May 23). RoadSafetyGB. https://roadsafetygb.org.uk/news/disabled-and-low-income-pedestrians-at-higher-risk-of-road-injury/

DroneBot Workshop. (2023, June 25). Simple ESP32-CAM Object Detection. YouTube. https://www.youtube.com/watch?v=HDRvZ_BYd08/

GoForSmoke, & system. (2013, March). VS1053 module. Arduino Forum. https://forum.arduino.cc/t/vs1053-module/153441/6/

Hagberth, J., & Moreau, L. [Instant_exit, & louis]. (2022, November). Esp32 cam object detection to gpio high help. Edge Impulse Community. https://forum.edgeimpulse.com/t/esp32-cam-object-detection-to-gpio-high-help/5894/

How To Electronics. (2021, October 5). Object Detection & Identification using ESP32 CAM Module & OpenCV. YouTube. https://www.youtube.com/watch?v=A1SPJSVra9I/

m_hamza, er_name_not_found, & idahowalker. (2022, October). Esp32 cam vs esp32. Arduino Forum. https://forum.arduino.cc/t/esp32-cam-vs-esp32/1039980/

NHPoulsen, groundFungus, & outbackhut. (2022, August). Dfplayer mini sound module. Arduino Forum. https://forum.arduino.cc/t/dfplayer-mini-sound-module/1019242/2/

Randall, J. [Jonathan R]. (2023, January 18). ESP32 stereo camera for object detection, recognition and distance estimation. YouTube. https://www.youtube.com/watch?v=CAVYHlFGpaw/

Redman1972, & ChrisJ4203. (2022, February 6). Why can Siri only announce notifications using headphones. Apple Support Community. https://discussions.apple.com/thread/253643535?answerId=256821698022&sortBy=best#256821698022/

Renard, E. (n.d.). Arduino – How to Get a String from Serial with readString(). The Robotics Back-End. https://roboticsbackend.com/arduino-how-to-get-a-string-from-serial-with-readstring/

Santos, R., & Santos, S. (2016, December). Getting Started with the ESP32 Development Board. Random Nerd Tutorials. https://randomnerdtutorials.com/getting-started-with-esp32/

Santos, R., & Santos, S. (2019). ESP32-CAM Video Streaming and Face Recognition with Arduino IDE. Random Nerd Tutorials. https://randomnerdtutorials.com/esp32-cam-video-streaming-face-recognition-arduino-ide/

Schwartz, N., Buliung, R., Daniel, A., & Rothman, L. (2022). Disability and pedestrian road traffic injury: A scoping review. Health & Place, 77. doi:10.1016/j.healthplace.2022.102896/

SEN0305 HUSKYLENS AI Machine Vision Sensor. (n.d.). DFRobot. https://wiki.dfrobot.com/HUSKYLENS_V1.0_SKU_SEN0305_SEN0336/

Techiesms. (2022, August 6). A compete guide to mp3 Module using Arduino & ESP32 board | DFPlayer Mini | Arduino Projects. YouTube. https://www.youtube.com/watch?v=cHGcoPfpP_w/

Wikipedia Contributors. (2023, November 4). STL (file format). Wikipedia, the Free Encyclopedia. https://en.wikipedia.org/w/index.php?title=STL_(file_format)&oldid=1183473076/

Wikipedia Contributors. (2024, February 12). F-score. Wikipedia, the Free Encyclopedia. https://en.wikipedia.org/w/index.php?title=F-score&oldid=1206654723/

Xaxania, Robin, & anon57585045. (2020, April). Checking if a the serial monitor contains a String. Arduino Forum. https://forum.arduino.cc/t/checking-if-a-the-serial-monitor-contains-a-string/649212/

Acknowledgement

I want to extend my sincere appreciation to my school science fair supervisor for granting me the opportunity to be part of this incredible experience. Their guidance and encouragement have been indispensable. My deepest gratitude also goes to my friends and parents, whose unwavering support has been my constant motivation, and thank you CYSF 2024 coordinators for this opportunity.